Tutorial 1 : Opening a window

- Introduction

- Prerequisites

- Forget Everything

- Building the tutorials

- Running the tutorials

- How to follow these tutorials

- Opening a window

Introduction

Welcome to the first tutorial !

Before jumping into OpenGL, you will first learn how to build the code that goes with each tutorial, how to run it, and most importantly, how to play with the code yourself.

Prerequisites

No special prerequisite is needed to follow these tutorials. Experience with any programming language ( C, Java, Lisp, Javascript, whatever ) helps to fully understand the code, but is not required; without it, things will merely be harder, because you will be learning two things at the same time.

All tutorials are written in “Easy C++” : Lots of effort has been made to make the code as simple as possible. No templates, no classes, no pointers. This way, you will be able to understand everything even if you only know Java.

Forget Everything

You don’t have to know anything, but you have to forget everything you know about OpenGL. If you know about something that looks like glBegin(), forget it. Here you will learn modern OpenGL (OpenGL 3 and 4) , and many online tutorials teach “old” OpenGL (OpenGL 1 and 2). So forget everything you might know before your brain melts from the mix.

Building the tutorials

All tutorials can be built on Windows, Linux and Mac. For all these platforms, the procedure is roughly the same :

- Update your drivers !! doooo it. You’ve been warned.

- Download a compiler, if you don’t already have one.

- Install CMake

- Download the source code of the tutorials

- Generate a project using CMake

- Build the project using your compiler

- Play with the samples !

Detailed procedures will now be given for each platform. Adaptations may be required. If unsure, read the instructions for Windows and try to adapt them.

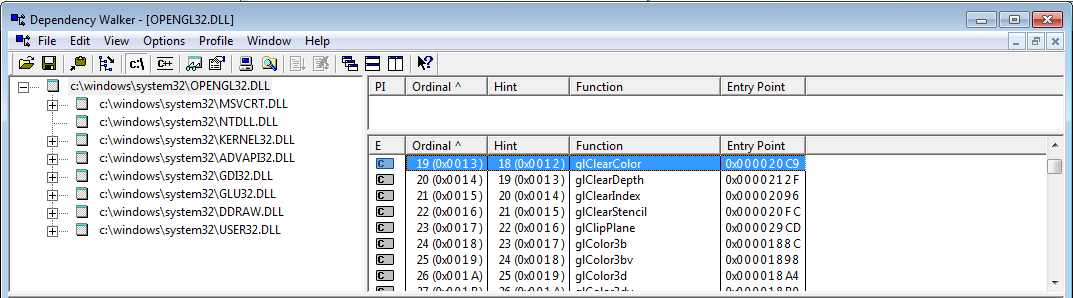

Building on Windows

- Updating your drivers should be easy. Just go to NVIDIA’s or AMD’s website and download the drivers. If unsure about your GPU model : Control Panel -> System and Security -> System -> Device Manager -> Display adapter. If you have an integrated Intel GPU, drivers are usually provided by your OEM (Dell, HP, …).

- We suggest using Visual Studio 2017 Express for Desktop as a compiler. You can download it for free here. MAKE SURE YOU CHOOSE CUSTOM INSTALLATION AND CHECK C++. If you prefer using MinGW, we recommend using Qt Creator. Install whichever you want. Subsequent steps will be explained with Visual Studio, but should be similar with any other IDE.

- Download CMake from here and install it

- Download the source code and unzip it, for instance in C:\Users\XYZ\Projects\OpenGLTutorials\ .

-

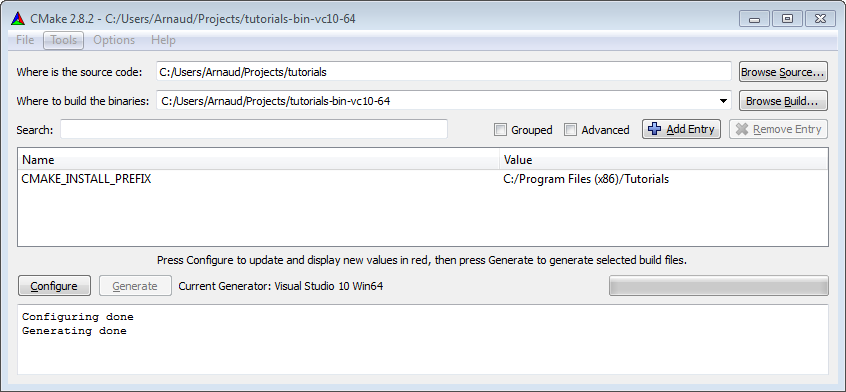

Launch CMake. In the first line, navigate to the unzipped folder. If unsure, choose the folder that contains the CMakeLists.txt file. In the second line, enter where you want all the compiler’s stuff to live. For instance, you can choose C:\Users\XYZ\Projects\OpenGLTutorials-build-Visual2017-64bits\, or C:\Users\XYZ\Projects\OpenGLTutorials\build\Visual2017-32bits. Notice that it can be anywhere, not necessarily in the same folder.

- Click on the Configure button. Since this is the first time you configure the project, CMake will ask you which compiler you would like to use. Choose wisely, depending on the compiler you installed in step 2. If you have a 64 bit Windows, you can choose 64 bits; if you don’t know, choose 32 bits.

- Click on Configure until all red lines disappear. Click on Generate. Your Visual Studio project is now created. You can now forget about CMake.

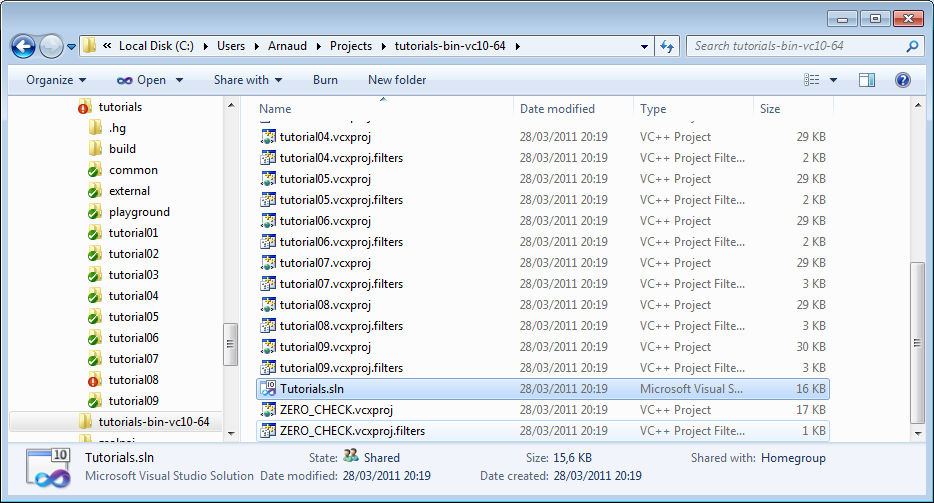

- Open the build folder you chose in CMake, for instance C:\Users\XYZ\Projects\OpenGLTutorials-build-Visual2017-64bits\ . You will see a Tutorials.sln file : open it with Visual Studio.

- In the Build menu, click Build All. Every tutorial and dependency will be compiled. Each executable will also be copied back into C:\Users\XYZ\Projects\OpenGLTutorials\ . Hopefully no error occurs.

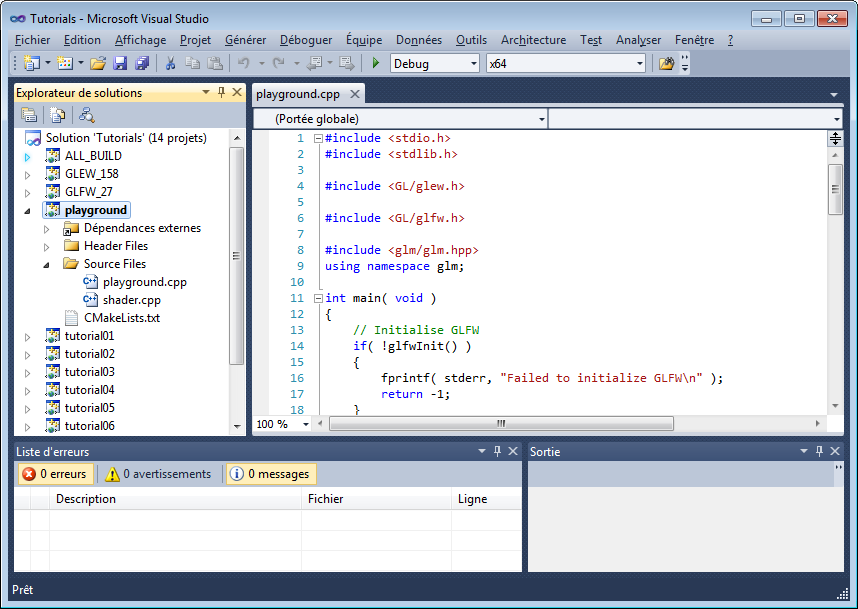

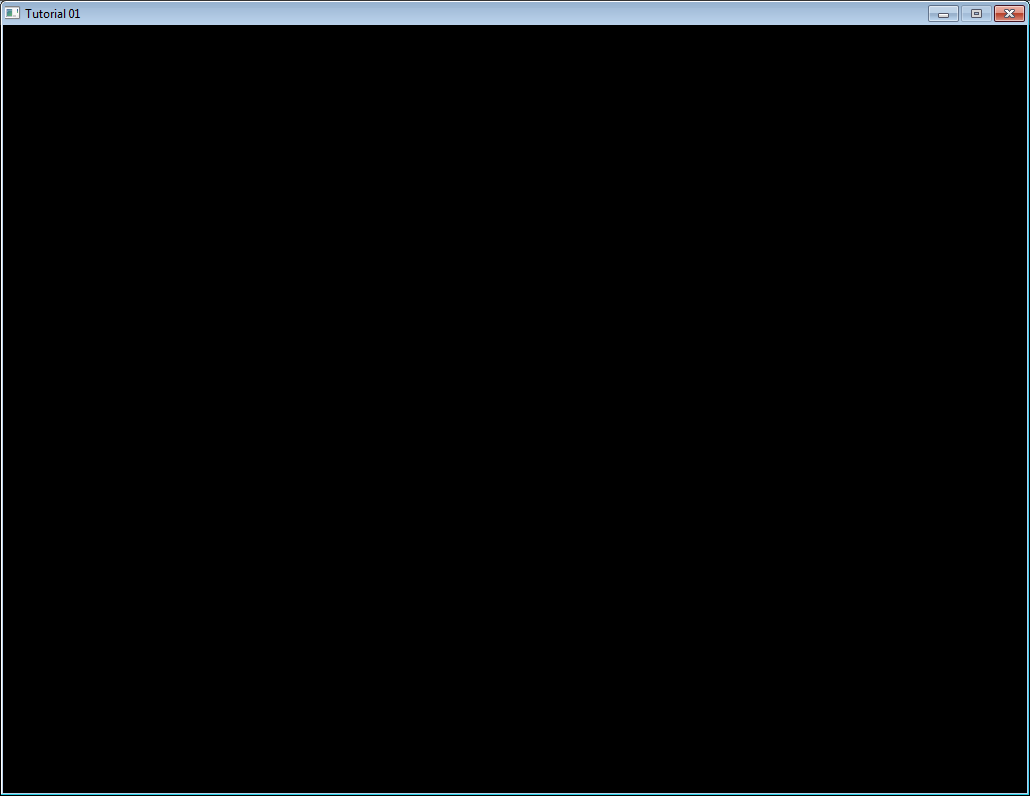

- Open C:\Users\XYZ\Projects\OpenGLTutorials\playground, and launch playground.exe. A black window should appear.

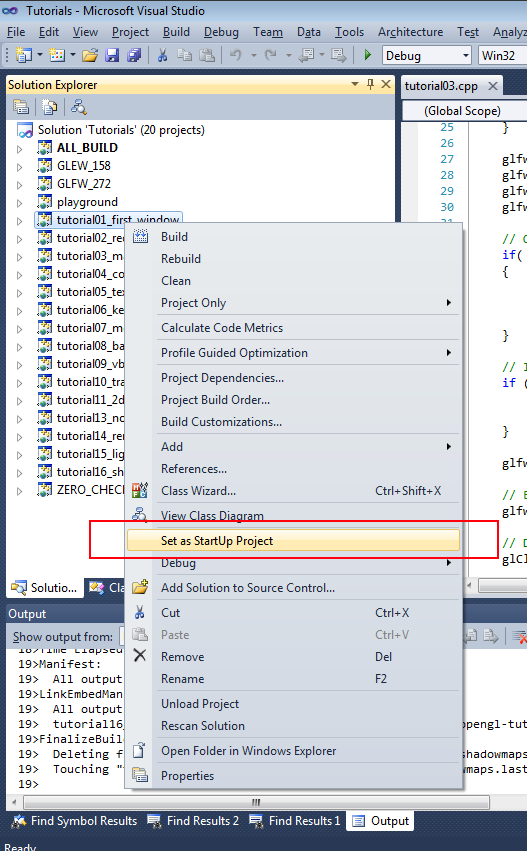

You can also launch any tutorial from inside Visual Studio. Right-click on Playground and choose “Set as StartUp Project”. You can now debug the code by pressing F5.

Building on Linux

There are so many Linux variants out there that it’s impossible to list every possible platform. Adapt if required, and don’t hesitate to read your distribution’s documentation.

- Install the latest drivers. We highly recommend the closed-source binary drivers. It’s not GNU or whatever, but they work. If your distribution doesn’t provide an automatic install, try Ubuntu’s guide.

- Install all needed compilers, tools & libs. Complete list is : cmake make g++ libx11-dev libxi-dev libgl1-mesa-dev libglu1-mesa-dev libxrandr-dev libxext-dev libxcursor-dev libxinerama-dev . Use sudo apt-get install ***** or su && yum install ****** .

- Download the source code and unzip it, for instance in ~/Projects/OpenGLTutorials/

- cd in ~/Projects/OpenGLTutorials/ and enter the following commands :

- mkdir build

- cd build

- cmake ..

- A makefile has been created in the build/ directory.

- Type “make all”. Every tutorial and dependency will be compiled. Each executable will also be copied back into ~/Projects/OpenGLTutorials/ . Hopefully no error occurs.

- Open ~/Projects/OpenGLTutorials/playground, and launch ./playground. A black window should appear.

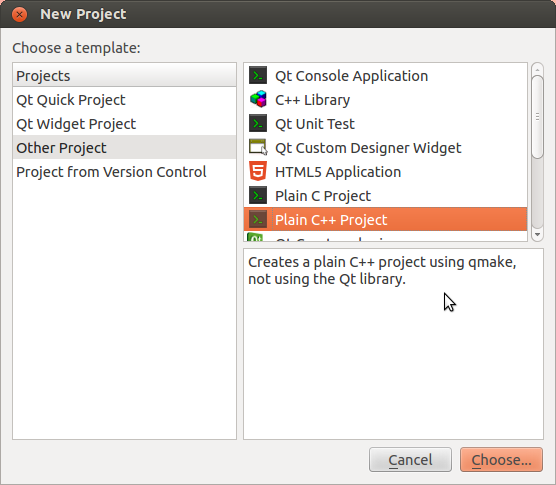

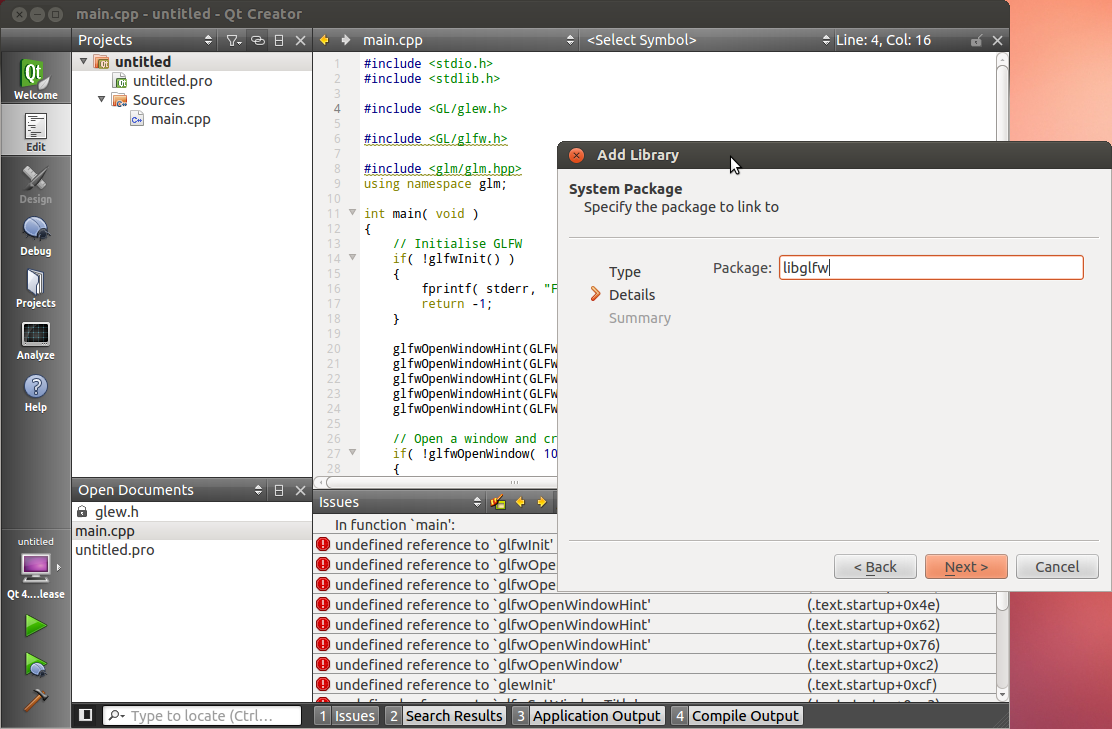

Note that you really should use an IDE like Qt Creator. In particular, this one has built-in support for CMake, and it will provide a much nicer experience when debugging. Here are the instructions for QtCreator :

- In QtCreator, go to File->Tools->Options->Compile&Execute->CMake

- Set the path to CMake. This is most probably /usr/bin/cmake

- File->Open Project; Select tutorials/CMakeLists.txt

- Select a build directory, preferably outside the tutorials folder

- Optionally set -DCMAKE_BUILD_TYPE=Debug in the parameters box. Validate.

- Click on the hammer on the bottom. The tutorials can now be launched from the tutorials/ folder.

- To run the tutorials from QtCreator, click on Projects->Execution parameters->Working Directory, and select the directory where the shaders, textures & models live. Example for tutorial 2 : ~/opengl-tutorial/tutorial02_red_triangle/

Building on Mac

The procedure is very similar to Windows’ (Makefiles are also supported, but won’t be explained here) :

- Install Xcode from the Mac App Store

- Download CMake, and install the .dmg . You don’t need to install the command-line tools.

- Download the source code and unzip it, for instance in ~/Projects/OpenGLTutorials/ .

- Launch CMake (Applications->CMake). In the first line, navigate to the unzipped folder. If unsure, choose the folder that contains the CMakeLists.txt file. In the second line, enter where you want all the compiler’s stuff to live. For instance, you can choose ~/Projects/OpenGLTutorials_bin_XCode/. Notice that it can be anywhere, not necessarily in the same folder.

- Click on the Configure button. Since this is the first time you configure the project, CMake will ask you which compiler you would like to use. Choose Xcode.

- Click on Configure until all red lines disappear. Click on Generate. Your Xcode project is now created. You can forget about CMake.

- Open ~/Projects/OpenGLTutorials_bin_XCode/ . You will see a Tutorials.xcodeproj file : open it.

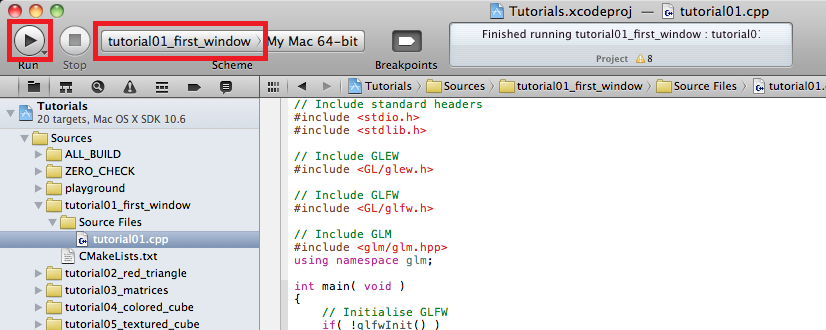

- Select the desired tutorial to run in Xcode’s Scheme panel, and use the Run button to compile & run :

Note for Code::Blocks

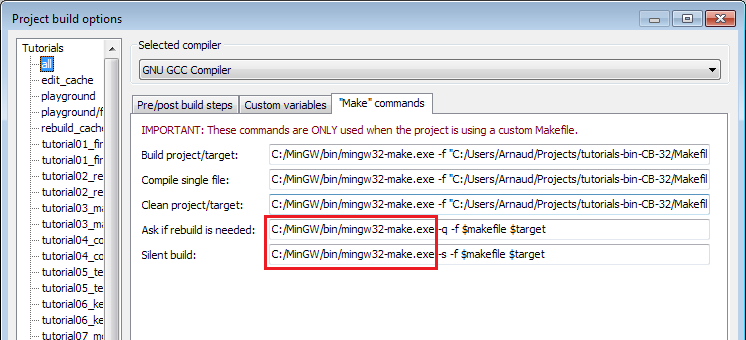

Due to 2 bugs (one in C::B, one in CMake), you have to edit the command-line in Project->Build Options->Make commands, as follows :

You also have to setup the working directory yourself : Project->Properties -> Build targets -> tutorial N -> execution working dir ( it’s src_dir/tutorial_N/ ).

Running the tutorials

You should run the tutorials directly from the right directory : simply double-click on the executable. If you like command line best, cd to the right directory.

If you want to run the tutorials from the IDE, don’t forget to read the instructions above to set the correct working directory.

How to follow these tutorials

Each tutorial comes with its source code and data, which can be found in tutorialXX/. However, you will never modify these projects : they are for reference only. Open playground/playground.cpp, and tweak this file instead. Torture it in any way you like. If you are lost, simply cut’n paste any tutorial in it, and everything should be back to normal.

We will provide snippets of code all along the tutorials. Don’t hesitate to cut’n paste them directly in the playground while you’re reading : experimentation is good. Avoid simply reading the finished code, you won’t learn a lot this way. Even with simple cut’n pasting, you’ll get your boatload of problems.

Opening a window

Finally ! OpenGL code ! Well, not really. Many tutorials show you the “low level” way to do things, so that you can see that no magic happens. But the “open a window” part is actually very boring and useless, so we will use GLFW, an external library, to do this for us instead. If you really wanted to, you could use the Win32 API on Windows, the X11 API on Linux, and the Cocoa API on Mac; or use another high-level library like SFML, FreeGLUT, SDL, … see the Links page.

Ok, let’s go. First, we’ll have to deal with dependencies : we need some basic stuff to display messages in the console :

// Include standard headers

#include <stdio.h>

#include <stdlib.h>

Next, GLEW. This one actually is a little bit magic, but let’s leave this for later.

// Include GLEW. Always include it before gl.h and glfw3.h, since it's a bit magic.

#include <GL/glew.h>

We decided to let GLFW handle the window and the keyboard, so let’s include it too :

// Include GLFW

#include <GLFW/glfw3.h>

We don’t actually need this one right now, but this is a library for 3D mathematics. It will prove very useful soon. There is no magic in GLM, you can write your own if you want; it’s just handy. The “using namespace” is there so that you can type “vec3” instead of “glm::vec3”.

// Include GLM

#include <glm/glm.hpp>

using namespace glm;

If you cut’n paste all these #include’s in playground.cpp, the compiler will complain that there is no main() function. So let’s create one :

int main(){

The first thing to do is to initialize GLFW :

// Initialise GLFW

glewExperimental = true; // Needed for core profile

if( !glfwInit() )

{

fprintf( stderr, "Failed to initialize GLFW\n" );

return -1;

}

We can now create our first OpenGL window !

glfwWindowHint(GLFW_SAMPLES, 4); // 4x antialiasing

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3); // We want OpenGL 3.3

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_FORWARD_COMPAT, GL_TRUE); // To make MacOS happy; should not be needed

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE); // We don't want the old OpenGL

// Open a window and create its OpenGL context

GLFWwindow* window; // (In the accompanying source code, this variable is global for simplicity)

window = glfwCreateWindow( 1024, 768, "Tutorial 01", NULL, NULL);

if( window == NULL ){

fprintf( stderr, "Failed to open GLFW window. If you have an Intel GPU, they are not 3.3 compatible. Try the 2.1 version of the tutorials.\n" );

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

// Initialize GLEW

glewExperimental=true; // Needed in core profile

if (glewInit() != GLEW_OK) {

fprintf(stderr, "Failed to initialize GLEW\n");

return -1;

}

Build this and run. A window should appear, and be closed right away. Of course! We need to wait until the user hits the Escape key :

// Ensure we can capture the escape key being pressed below

glfwSetInputMode(window, GLFW_STICKY_KEYS, GL_TRUE);

do{

// Clear the screen. Clearing is not explained before Tutorial 02, but skipping it can cause flickering, so it's there nonetheless.

glClear( GL_COLOR_BUFFER_BIT );

// Draw nothing, see you in tutorial 2 !

// Swap buffers

glfwSwapBuffers(window);

glfwPollEvents();

} // Check if the ESC key was pressed or the window was closed

while( glfwGetKey(window, GLFW_KEY_ESCAPE ) != GLFW_PRESS &&

glfwWindowShouldClose(window) == 0 );

// Close the OpenGL window and terminate GLFW

glfwTerminate();

return 0;

}

And this concludes our first tutorial ! In Tutorial 2, you will learn how to actually draw a triangle.

Tutorial 2 : The first triangle

This will be another long tutorial.

OpenGL 3 makes it easy to write complicated stuff, but at the expense that drawing a simple triangle is actually quite difficult.

Don’t forget to cut’n paste the code on a regular basis.

If the program crashes at startup, you’re probably running from the wrong directory. Read CAREFULLY the first tutorial and the FAQ on how to configure Visual Studio !
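A quick way to diagnose a wrong working directory is to test, at startup, whether a file the tutorial needs can be opened at all. The sketch below is an illustration only: the helper names canOpenFile and checkWorkingDirectory are hypothetical and not part of the tutorials' source code, and they use nothing but the C++ standard library.

```cpp
#include <cstdio>
#include <fstream>

// Hypothetical helper (not part of the tutorials' code): returns true if
// 'path' can be opened for reading, relative to the current working directory.
bool canOpenFile(const char* path) {
    std::ifstream stream(path);
    return stream.is_open();
}

// Prints a hint to stderr when the file is missing. Returns the same result
// as canOpenFile, so the caller can decide whether to stop.
bool checkWorkingDirectory(const char* requiredFile) {
    if (canOpenFile(requiredFile)) {
        return true;
    }
    std::fprintf(stderr,
                 "Cannot open %s. Are you running from the right directory ?\n",
                 requiredFile);
    return false;
}
```

Calling checkWorkingDirectory with the name of one of the shader files at the top of main() would then print a readable hint instead of failing later.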

The VAO

I won’t dig into details now, but you need to create a Vertex Array Object and set it as the current one :

GLuint VertexArrayID;

glGenVertexArrays(1, &VertexArrayID);

glBindVertexArray(VertexArrayID);

Do this once your window is created (= after the OpenGL Context creation) and before any other OpenGL call.

If you really want to know more about VAOs, there are a few other tutorials out there, but this is not very important.

Screen Coordinates

A triangle is defined by three points. When talking about “points” in 3D graphics, we usually use the word “vertex” ( “vertices” in the plural ). A vertex has 3 coordinates : X, Y and Z. You can think about these three coordinates in the following way :

- X is on your right

- Y is up

- Z is towards your back (yes, behind, not in front of you)

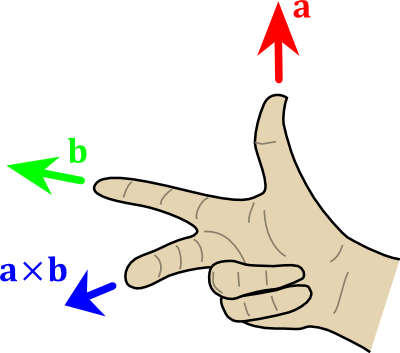

But here is a better way to visualize this : use the Right Hand Rule

- X is your thumb

- Y is your index

- Z is your middle finger. If you put your thumb to the right and your index to the sky, it will point to your back, too.

Having the Z in this direction is weird, so why is it so ? Short answer : because 100 years of Right Hand Rule Math will give you lots of useful tools. The only downside is an unintuitive Z.

On a side note, notice that you can move your hand freely : your X, Y and Z will be moving, too. More on this later.

So we need three 3D points in order to make a triangle ; let’s go :

// An array of 3 vectors which represents 3 vertices

static const GLfloat g_vertex_buffer_data[] = {

-1.0f, -1.0f, 0.0f,

1.0f, -1.0f, 0.0f,

0.0f, 1.0f, 0.0f,

};

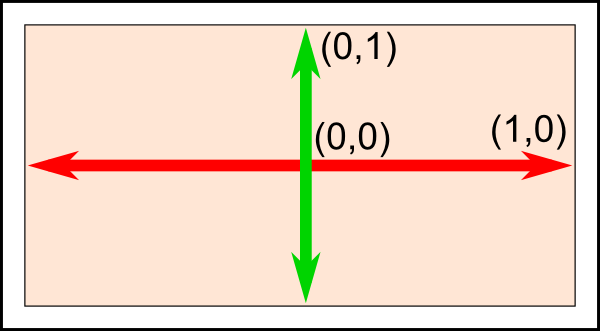

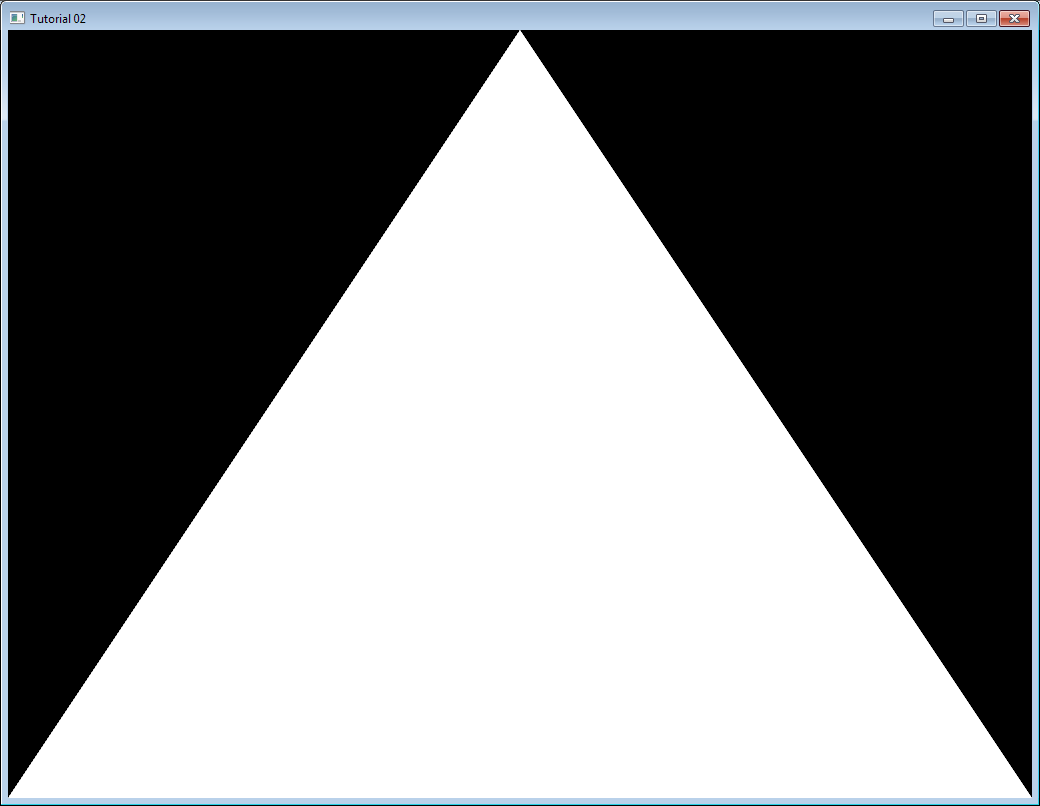

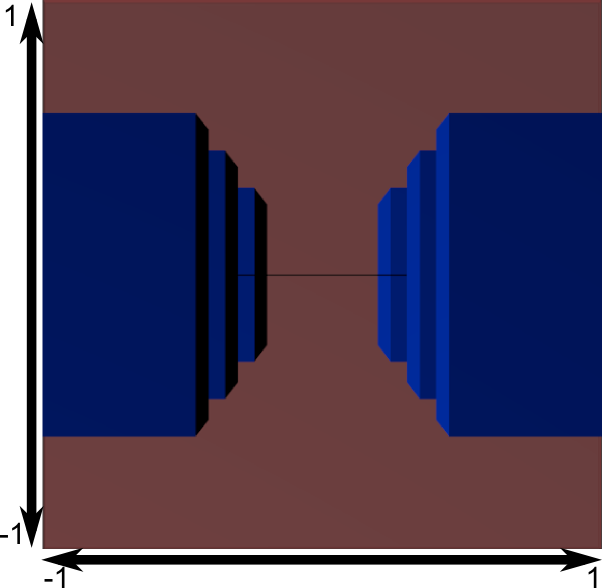

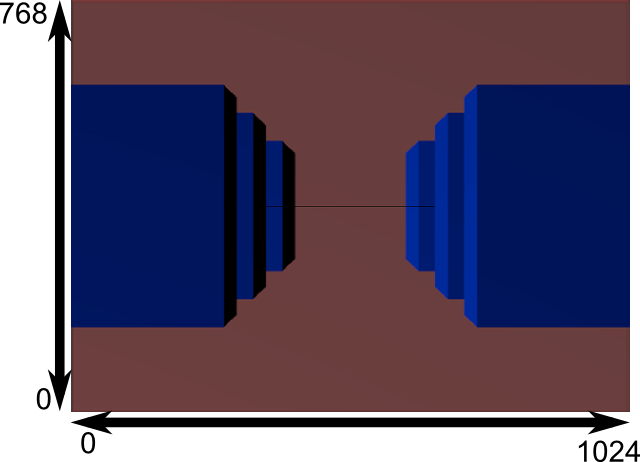

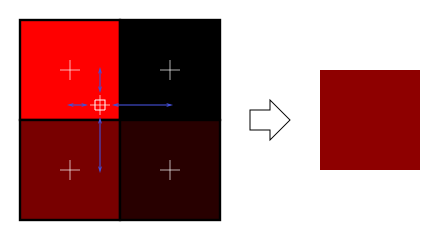

The first vertex is (-1,-1,0). This means that unless we transform it in some way, it will be displayed at (-1,-1) on the screen. What does this mean ? The screen origin is in the middle, X is on the right, as usual, and Y is up. This is what it gives on a wide screen :

This is something you can’t change, it’s built into your graphics card. So (-1,-1) is the bottom left corner of your screen. (1,-1) is the bottom right, and (0,1) is the middle top. So this triangle should take most of the screen.
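To make the mapping concrete, here is a small sketch that converts these -1..1 coordinates into pixels. It assumes the drawing area covers the whole 1024x768 window from Tutorial 1 and that pixels are counted from the bottom left corner; the names PixelPosition and toPixels are made up for this illustration. OpenGL performs the equivalent step for you, so you never need to write this yourself.

```cpp
// A 2D position in pixels, with the origin at the bottom left of the window.
struct PixelPosition {
    float x;
    float y;
};

// Maps coordinates in [-1, 1] to pixels for a window of the given size.
// -1 lands on the left/bottom edge, +1 on the right/top edge, 0 in the middle.
PixelPosition toPixels(float x, float y, float width, float height) {
    PixelPosition p;
    p.x = (x + 1.0f) * 0.5f * width;
    p.y = (y + 1.0f) * 0.5f * height;
    return p;
}
```

With a 1024x768 window, the three vertices of our triangle end up at (0,0), (1024,0) and (512,768).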

Drawing our triangle

The next step is to give this triangle to OpenGL. We do this by creating a buffer:

// This will identify our vertex buffer

GLuint vertexbuffer;

// Generate 1 buffer, put the resulting identifier in vertexbuffer

glGenBuffers(1, &vertexbuffer);

// The following commands will talk about our 'vertexbuffer' buffer

glBindBuffer(GL_ARRAY_BUFFER, vertexbuffer);

// Give our vertices to OpenGL.

glBufferData(GL_ARRAY_BUFFER, sizeof(g_vertex_buffer_data), g_vertex_buffer_data, GL_STATIC_DRAW);

This needs to be done only once.
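Two numbers derived from the array are worth keeping in mind: its size in bytes, which is what sizeof gives to glBufferData, and the number of vertices it holds. The sketch below computes both. It uses float instead of GLfloat so that it compiles without any OpenGL header (an assumption: GLfloat is a 32-bit float on the usual platforms), and the names triangle_data, triangleBytes and triangleVertexCount are illustrative.

```cpp
#include <cstddef>

// Same layout as g_vertex_buffer_data: 3 floats (X, Y, Z) per vertex.
static const float triangle_data[] = {
    -1.0f, -1.0f, 0.0f,
     1.0f, -1.0f, 0.0f,
     0.0f,  1.0f, 0.0f,
};

// Size in bytes of the whole array: the "size" argument of glBufferData.
const std::size_t triangleBytes = sizeof(triangle_data);

// Number of vertices: total size divided by the size of one (X, Y, Z) triplet.
const std::size_t triangleVertexCount =
    sizeof(triangle_data) / (3 * sizeof(float));
```

Computing the count this way keeps it in sync with the array if you add more triangles later.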

Now, in our main loop, where we used to draw “nothing”, we can draw our magnificent triangle :

// 1st attribute buffer : vertices

glEnableVertexAttribArray(0);

glBindBuffer(GL_ARRAY_BUFFER, vertexbuffer);

glVertexAttribPointer(

0, // attribute 0. No particular reason for 0, but must match the layout in the shader.

3, // size

GL_FLOAT, // type

GL_FALSE, // normalized?

0, // stride

(void*)0 // array buffer offset

);

// Draw the triangle !

glDrawArrays(GL_TRIANGLES, 0, 3); // Starting from vertex 0; 3 vertices total -> 1 triangle

glDisableVertexAttribArray(0);

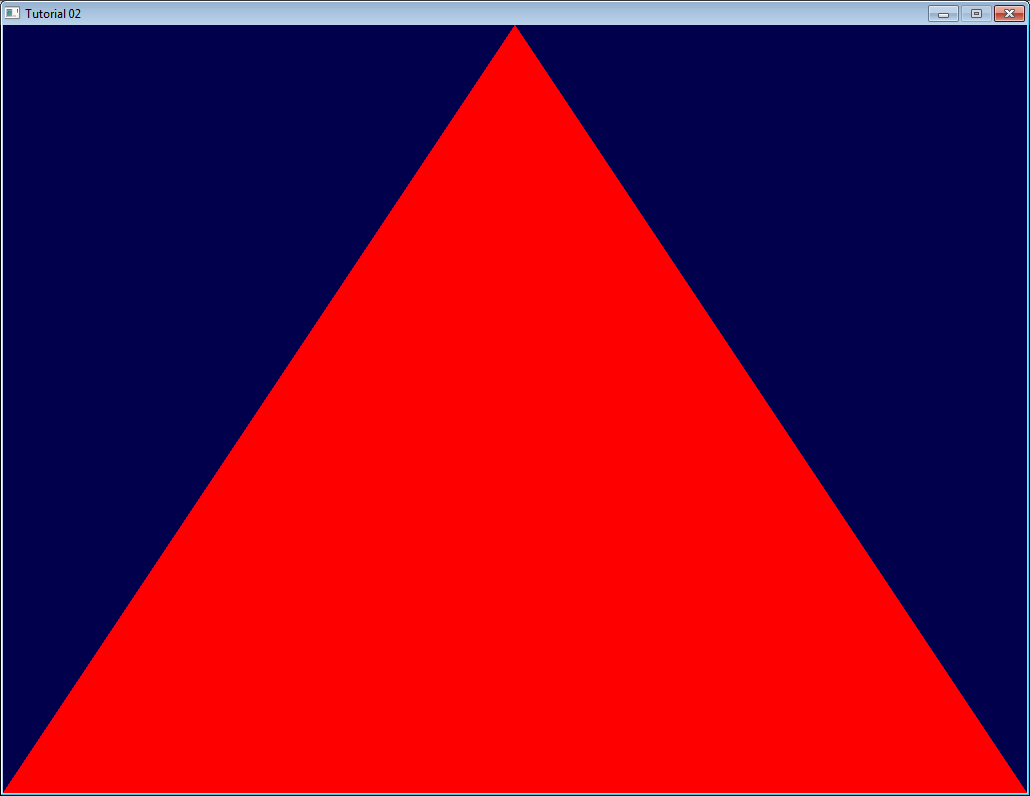

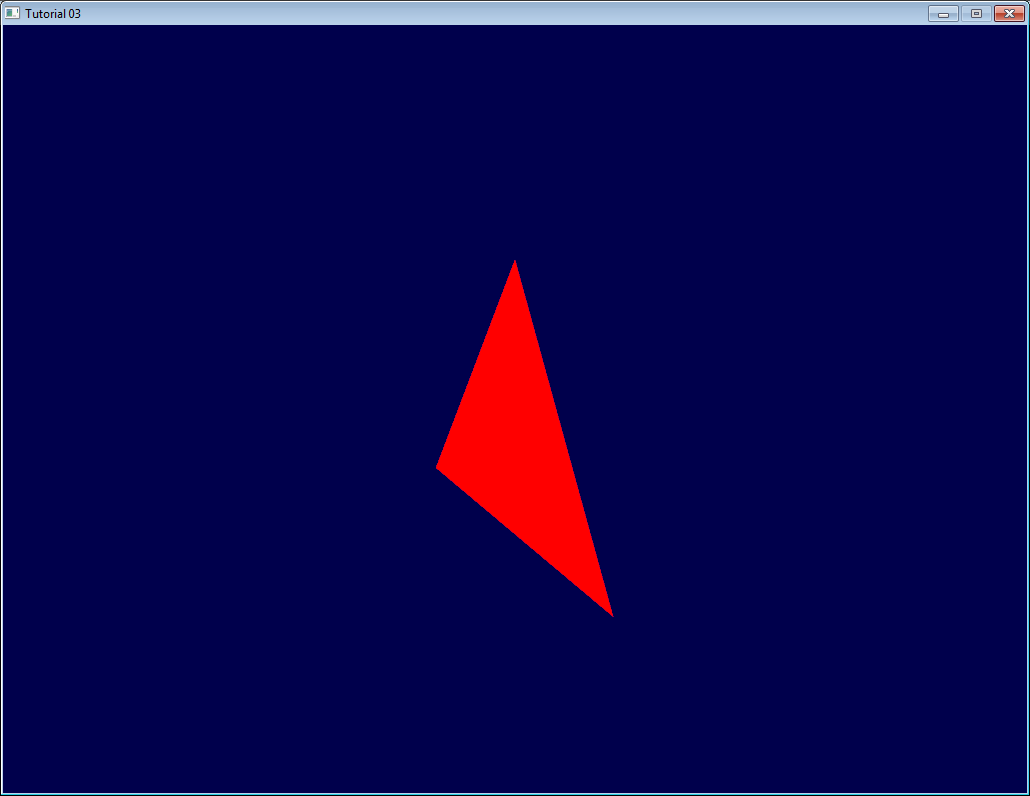

If you’re lucky, you can see the result in white. (Don’t panic if you don’t : some systems require a shader to show anything) :

Now this is some boring white. Let’s see how we can improve it by painting it in red. This is done by using something called shaders.

Shaders

Shader Compilation

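In the simplest possible configuration, you will need two shaders : one called Vertex Shader, which will be executed for each vertex, and one called Fragment Shader, which will be executed for each fragment, that is, roughly once for each pixel covered by the triangle. With 4x antialiasing, each pixel stores 4 samples, but by default the fragment shader still runs once per pixel and its result is written to the covered samples.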

Shaders are programmed in a language called GLSL : GL Shader Language, which is part of OpenGL. Unlike C or Java, GLSL has to be compiled at run time, which means that each and every time you launch your application, all your shaders are recompiled.

The two shaders are usually in separate files. In this example, we have SimpleFragmentShader.fragmentshader and SimpleVertexShader.vertexshader . The extension is irrelevant, it could be .txt or .glsl .

So here’s the code. It’s not very important to fully understand it, since you often do this only once in a program, so comments should be enough. Since this function will be used by all other tutorials, it is placed in a separate file : common/shader.cpp . Notice that just like buffers, shaders are not directly accessible : we just have an ID. The actual implementation is hidden inside the driver.

// Headers used below, in addition to <stdio.h> and <GL/glew.h>

#include <string>

#include <vector>

#include <fstream>

#include <sstream>

GLuint LoadShaders(const char * vertex_file_path,const char * fragment_file_path){

// Create the shaders

GLuint VertexShaderID = glCreateShader(GL_VERTEX_SHADER);

GLuint FragmentShaderID = glCreateShader(GL_FRAGMENT_SHADER);

// Read the Vertex Shader code from the file

std::string VertexShaderCode;

std::ifstream VertexShaderStream(vertex_file_path, std::ios::in);

if(VertexShaderStream.is_open()){

std::stringstream sstr;

sstr << VertexShaderStream.rdbuf();

VertexShaderCode = sstr.str();

VertexShaderStream.close();

}else{

printf("Impossible to open %s. Are you in the right directory ? Don't forget to read the FAQ !\n", vertex_file_path);

getchar();

return 0;

}

// Read the Fragment Shader code from the file

std::string FragmentShaderCode;

std::ifstream FragmentShaderStream(fragment_file_path, std::ios::in);

if(FragmentShaderStream.is_open()){

std::stringstream sstr;

sstr << FragmentShaderStream.rdbuf();

FragmentShaderCode = sstr.str();

FragmentShaderStream.close();

}

GLint Result = GL_FALSE;

int InfoLogLength;

// Compile Vertex Shader

printf("Compiling shader : %s\n", vertex_file_path);

char const * VertexSourcePointer = VertexShaderCode.c_str();

glShaderSource(VertexShaderID, 1, &VertexSourcePointer , NULL);

glCompileShader(VertexShaderID);

// Check Vertex Shader

glGetShaderiv(VertexShaderID, GL_COMPILE_STATUS, &Result);

glGetShaderiv(VertexShaderID, GL_INFO_LOG_LENGTH, &InfoLogLength);

if ( InfoLogLength > 0 ){

std::vector<char> VertexShaderErrorMessage(InfoLogLength+1);

glGetShaderInfoLog(VertexShaderID, InfoLogLength, NULL, &VertexShaderErrorMessage[0]);

printf("%s\n", &VertexShaderErrorMessage[0]);

}

// Compile Fragment Shader

printf("Compiling shader : %s\n", fragment_file_path);

char const * FragmentSourcePointer = FragmentShaderCode.c_str();

glShaderSource(FragmentShaderID, 1, &FragmentSourcePointer , NULL);

glCompileShader(FragmentShaderID);

// Check Fragment Shader

glGetShaderiv(FragmentShaderID, GL_COMPILE_STATUS, &Result);

glGetShaderiv(FragmentShaderID, GL_INFO_LOG_LENGTH, &InfoLogLength);

if ( InfoLogLength > 0 ){

std::vector<char> FragmentShaderErrorMessage(InfoLogLength+1);

glGetShaderInfoLog(FragmentShaderID, InfoLogLength, NULL, &FragmentShaderErrorMessage[0]);

printf("%s\n", &FragmentShaderErrorMessage[0]);

}

// Link the program

printf("Linking program\n");

GLuint ProgramID = glCreateProgram();

glAttachShader(ProgramID, VertexShaderID);

glAttachShader(ProgramID, FragmentShaderID);

glLinkProgram(ProgramID);

// Check the program

glGetProgramiv(ProgramID, GL_LINK_STATUS, &Result);

glGetProgramiv(ProgramID, GL_INFO_LOG_LENGTH, &InfoLogLength);

if ( InfoLogLength > 0 ){

std::vector<char> ProgramErrorMessage(InfoLogLength+1);

glGetProgramInfoLog(ProgramID, InfoLogLength, NULL, &ProgramErrorMessage[0]);

printf("%s\n", &ProgramErrorMessage[0]);

}

glDetachShader(ProgramID, VertexShaderID);

glDetachShader(ProgramID, FragmentShaderID);

glDeleteShader(VertexShaderID);

glDeleteShader(FragmentShaderID);

return ProgramID;

}
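In the function above, the vertex shader file and the fragment shader file are read with the same few lines, but only the vertex shader branch reports a missing file. One possible way to treat both alike is to move the reading into a small helper. The sketch below is such a helper; readTextFile is a hypothetical name, not a function from the tutorials' code, and it relies only on the C++ standard library.

```cpp
#include <fstream>
#include <sstream>
#include <string>

// Hypothetical helper: reads the whole file at 'path' into 'contents'.
// Returns false, and leaves 'contents' untouched, if the file cannot be opened.
bool readTextFile(const char* path, std::string& contents) {
    std::ifstream stream(path, std::ios::in);
    if (!stream.is_open()) {
        return false;
    }
    std::stringstream buffer;
    buffer << stream.rdbuf();   // copy the entire file into the buffer
    contents = buffer.str();
    return true;
}
```

A loader could call it once per shader file and print the same "Are you in the right directory ?" message whenever it returns false.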

Our Vertex Shader

Let’s write our vertex shader first. The first line tells the compiler that we will use OpenGL 3’s syntax.

#version 330 core

The second line declares the input data :

layout(location = 0) in vec3 vertexPosition_modelspace;

Let’s explain this line in detail :

- “vec3” is a vector of 3 components in GLSL. It is similar (but not identical) to the glm::vec3 type in C++. The important thing is that if we use 3 components per vertex in C++, as in our triangle array, we use 3 components in GLSL too.

- “layout(location = 0)” refers to the buffer we use to feed the vertexPosition_modelspace attribute. Each vertex can have numerous attributes : A position, one or several colours, one or several texture coordinates, lots of other things. OpenGL doesn’t know what a colour is : it just sees a vec3. So we have to tell it which buffer corresponds to which input. We do that by setting the layout to the same value as the first parameter to glVertexAttribPointer. The value “0” is not important, it could be 12 (as long as it stays below the limit returned by glGetIntegerv(GL_MAX_VERTEX_ATTRIBS, &v) ), the important thing is that it’s the same number on both sides.

- “vertexPosition_modelspace” could have any other name. It will contain the position of the vertex for each run of the vertex shader.

- “in” means that this is some input data. Soon we’ll see the “out” keyword.

The function that is called for each vertex is called main, just as in C :

void main(){

Our main function will merely set the vertex’s position to whatever was in the buffer. So if we gave (1,1), the triangle would have one of its vertices at the top right corner of the screen. We’ll see in the next tutorial how to do some more interesting computations on the input position.

gl_Position.xyz = vertexPosition_modelspace;

gl_Position.w = 1.0;

}

gl_Position is one of the few built-in variables : you *have* to assign some value to it. Everything else is optional; we’ll see what “everything else” means in Tutorial 4.

Our Fragment Shader

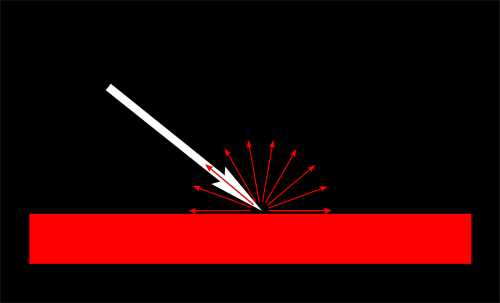

For our first fragment shader, we will do something really simple : set the color of each fragment to red. (Remember, with 4x AA each pixel holds 4 samples; the fragment shader’s output is what gets written to them.)

#version 330 core

out vec3 color;

void main(){

color = vec3(1,0,0);

}

So yeah, vec3(1,0,0) means red. This is because on computer screens, colour is represented by a Red, Green, and Blue triplet, in this order. So (1,0,0) means Full Red, no green and no blue.

Putting it all together

Import our LoadShaders function as the last include:

#include <common/shader.hpp>

Before the main loop, call our LoadShaders function:

// Create and compile our GLSL program from the shaders

GLuint programID = LoadShaders( "SimpleVertexShader.vertexshader", "SimpleFragmentShader.fragmentshader" );

Now inside the main loop, first clear the screen. The background will be dark blue if you called glClearColor(0.0f, 0.0f, 0.4f, 0.0f) once before the main loop:

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

and then tell OpenGL that you want to use your shader:

// Use our shader

glUseProgram(programID);

// Draw triangle...

… and presto, here’s your red triangle !

In the next tutorial we’ll learn transformations : How to set up your camera, move your objects, etc.

Tutorial 3: Matrices

- Homogeneous coordinates

- Transformation matrices

- The Model, View and Projection matrices

- Putting it all together

- Exercises

The engines don’t move the ship at all. The ship stays where it is and the engines move the universe around it.

Futurama

This is the single most important tutorial of the whole set. Be sure to read it at least eight times.

Homogeneous coordinates

Until now, we only considered 3D vertices as a (x,y,z) triplet. Let’s introduce w. We will now have (x,y,z,w) vectors.

This will be more clear soon, but for now, just remember this:

- If w == 1, then the vector (x,y,z,1) is a position in space.

- If w == 0, then the vector (x,y,z,0) is a direction.

(In fact, remember this forever.)

What difference does this make? Well, for a rotation, it doesn’t change anything. When you rotate a point or a direction, you get the same result. However, for a translation (when you move the point in a certain direction), things are different. What could “translate a direction” mean? Not much.

Homogeneous coordinates allow us to use a single mathematical formula to deal with these two cases.

Transformation matrices

An introduction to matrices

Simply put, a matrix is an array of numbers with a predefined number of rows and columns. For instance, a 2x3 matrix can look like this:

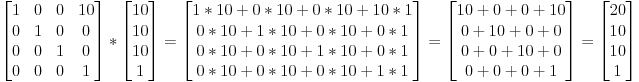

In 3D graphics we will mostly use 4x4 matrices. They will allow us to transform our (x,y,z,w) vertices. This is done by multiplying the vertex with the matrix:

Matrix x Vertex (in this order!!) = TransformedVertex

This isn’t as scary as it looks. Put your left finger on the a, and your right finger on the x. This is ax. Move your left finger to the next number (b), and your right finger to the next number (y). You’ve got by. Once again: cz. Once again: dw. ax + by + cz + dw. You’ve got your new x! Do the same for each line, and you’ll get your new (x,y,z,w) vector.

Now this is quite boring to compute, and we will do this often, so let’s ask the computer to do it instead.

In C++, with GLM:

glm::mat4 myMatrix;

glm::vec4 myVector;

// fill myMatrix and myVector somehow

glm::vec4 transformedVector = myMatrix * myVector; // Again, in this order! This is important.

In GLSL:

mat4 myMatrix;

vec4 myVector;

// fill myMatrix and myVector somehow

vec4 transformedVector = myMatrix * myVector; // Yeah, it's pretty much the same as GLM

(have you cut ‘n’ pasted this in your code? Go on, try it!)
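GLM hides the arithmetic, but as noted in Tutorial 1 there is no magic in it. The sketch below spells out the row-by-row walk described above in plain C++. Vec4, Mat4 and multiply are hypothetical stand-ins written for this illustration, not GLM types, and the matrix is indexed as m[row][column] for readability, which is not how GLM lays out its data internally.

```cpp
// Minimal stand-ins for glm::vec4 and glm::mat4 (illustration only).
struct Vec4 {
    float x, y, z, w;
};

// m[row][column]: each row holds the four numbers (a, b, c, d) of one line.
struct Mat4 {
    float m[4][4];
};

// Matrix x Vertex: each output component is one row of the matrix multiplied
// term by term with the vector, then summed (ax + by + cz + dw).
Vec4 multiply(const Mat4& matrix, const Vec4& v) {
    const float in[4] = { v.x, v.y, v.z, v.w };
    float out[4];
    for (int row = 0; row < 4; ++row) {
        out[row] = 0.0f;
        for (int col = 0; col < 4; ++col) {
            out[row] += matrix.m[row][col] * in[col];
        }
    }
    return Vec4{ out[0], out[1], out[2], out[3] };
}
```

In real code you would keep using glm::mat4 and glm::vec4; this version only exists to show that the product is sixteen multiplications and a few additions.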

Translation matrices

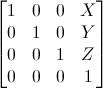

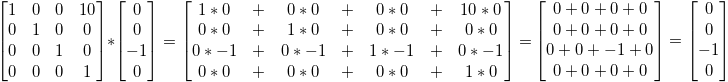

These are the simplest transformation matrices to understand. A translation matrix looks like this:

where X,Y,Z are the values that you want to add to your position.

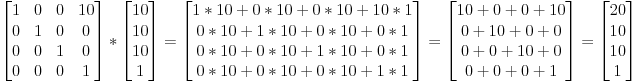

So if we want to translate the vector (10,10,10,1) by 10 units in the X direction, we get:

(do it! doooooo it)

…and we get a (20,10,10,1) homogeneous vector! Remember, the 1 means that it is a position, not a direction. So our transformation didn’t change the fact that we were dealing with a position, which is good.

Let’s now see what happens to a vector that represents a direction towards the -z axis: (0,0,-1,0)

…i.e. our original (0,0,-1,0) direction, which is great because, as I said earlier, moving a direction does not make sense.
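Before turning to GLM, the two products above can be checked by hand with a few lines of plain C++. This is a sketch under stated assumptions: Vec4, Mat4, multiply and translation are hypothetical helpers written for this example, with the matrix indexed as m[row][column] and X, Y, Z stored in the last column, as in the translation matrix described above.

```cpp
struct Vec4 { float x, y, z, w; };
struct Mat4 { float m[4][4]; };   // m[row][column]

// Matrix x Vertex, written out one row at a time.
Vec4 multiply(const Mat4& a, const Vec4& v) {
    Vec4 r;
    r.x = a.m[0][0] * v.x + a.m[0][1] * v.y + a.m[0][2] * v.z + a.m[0][3] * v.w;
    r.y = a.m[1][0] * v.x + a.m[1][1] * v.y + a.m[1][2] * v.z + a.m[1][3] * v.w;
    r.z = a.m[2][0] * v.x + a.m[2][1] * v.y + a.m[2][2] * v.z + a.m[2][3] * v.w;
    r.w = a.m[3][0] * v.x + a.m[3][1] * v.y + a.m[3][2] * v.z + a.m[3][3] * v.w;
    return r;
}

// Translation matrix: the identity, with (X, Y, Z) in the last column.
Mat4 translation(float X, float Y, float Z) {
    Mat4 t = {{
        { 1.0f, 0.0f, 0.0f, X },
        { 0.0f, 1.0f, 0.0f, Y },
        { 0.0f, 0.0f, 1.0f, Z },
        { 0.0f, 0.0f, 0.0f, 1.0f },
    }};
    return t;
}
```

Multiplying translation(10, 0, 0) by the position (10,10,10,1) gives (20,10,10,1), while the direction (0,0,-1,0) comes out unchanged: its w of 0 cancels the last column, which is exactly why directions ignore translations.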

So, how does this translate to code?

In C++, with GLM:

// Recent GLM versions may require #define GLM_ENABLE_EXPERIMENTAL before including gtx headers

#include <glm/gtx/transform.hpp> // after <glm/glm.hpp>

glm::mat4 myMatrix = glm::translate(glm::mat4(1.0f), glm::vec3(10.0f, 0.0f, 0.0f)); // glm::mat4(1.0f) is the identity; recent GLM versions do not initialize glm::mat4()

glm::vec4 myVector(10.0f, 10.0f, 10.0f, 0.0f);

glm::vec4 transformedVector = myMatrix * myVector; // guess the result

In GLSL:

vec4 transformedVector = myMatrix * myVector;

Well, in fact, you almost never do this in GLSL. Most of the time, you use glm::translate() in C++ to compute your matrix, send it to GLSL, and do only the multiplication:

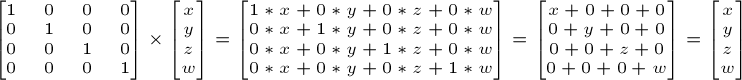

The Identity matrix

This one is special. It doesn’t do anything. But I mention it because it’s as important as knowing that multiplying A by 1.0 gives A.

In C++:

glm::mat4 myIdentityMatrix = glm::mat4(1.0f);

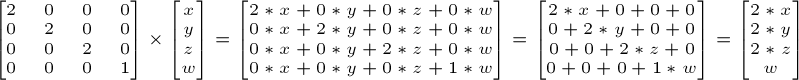

Scaling matrices

Scaling matrices are quite easy too:

So if you want to scale a vector (position or direction, it doesn’t matter) by 2.0 in all directions:

and the w still didn’t change. You may ask: what is the meaning of “scaling a direction”? Well, often, not much, so you usually don’t do such a thing, but in some (rare) cases it can be handy.

(notice that the identity matrix is only a special case of scaling matrices, with (X,Y,Z) = (1,1,1). It’s also a special case of translation matrix with (X,Y,Z)=(0,0,0), by the way)

In C++:

// Use #include <glm/gtc/matrix_transform.hpp> and #include <glm/gtx/transform.hpp>

glm::mat4 myScalingMatrix = glm::scale(glm::mat4(1), glm::vec3(2,2,2));

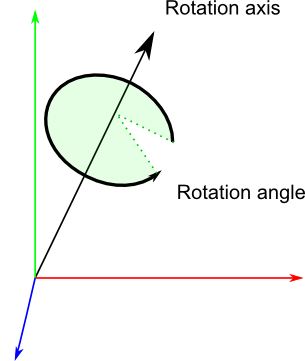

Rotation matrices

These are quite complicated. I’ll skip the details here, as it’s not important to know their exact layout for everyday use. For more information, please have a look at the Matrices and Quaternions FAQ (popular resource, probably available in your language as well). You can also have a look at the Rotations tutorials.

In C++:

// Use #include <glm/gtc/matrix_transform.hpp> and #include <glm/gtx/transform.hpp>

glm::vec3 myRotationAxis(??,??,??);

glm::rotate( glm::radians(angle_in_degrees), myRotationAxis ); // recent GLM versions expect the angle in radians

Cumulating transformations

So now we know how to rotate, translate, and scale our vectors. It would be great to combine these transformations. This is done by multiplying the matrices together, for instance:

TransformedVector = TranslationMatrix * RotationMatrix * ScaleMatrix * OriginalVector;

!!! BEWARE!!! This line actually performs the scaling FIRST, and THEN the rotation, and THEN the translation. This is how matrix multiplication works.

Writing the operations in another order wouldn’t produce the same result. Try it yourself:

- make one step ahead (beware of your computer) and turn left;

- turn left, and make one step ahead

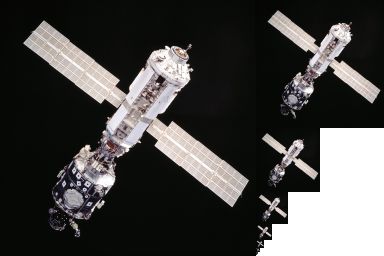

As a matter of fact, the order above is what you will usually need for game characters and other items: Scale it first if needed; then set its direction, then translate it. For instance, given a ship model (rotations have been removed for simplification):

- The wrong way:

- You translate the ship by (10,0,0). Its center is now 10 units away from the origin.

- You scale your ship by 2. Every coordinate is multiplied by 2 relative to the origin, which is far away… So you end up with a big ship, but centered at 2*10 = 20. Which you don’t want.

- The right way:

- You scale your ship by 2. You get a big ship, centered on the origin.

- You translate your ship. It’s still the same size, and at the right distance.
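The ship example can be verified numerically. The sketch below builds both orders and applies them to the ship's center. It is an illustration only: Vec4, Mat4, multiply, translation, scaling, wrongOrder and rightOrder are hypothetical helpers written for this example (matrices indexed as m[row][column]), not GLM functions.

```cpp
struct Vec4 { float x, y, z, w; };
struct Mat4 { float m[4][4]; };   // m[row][column]

// Matrix x Vertex.
Vec4 multiply(const Mat4& a, const Vec4& v) {
    Vec4 r;
    r.x = a.m[0][0] * v.x + a.m[0][1] * v.y + a.m[0][2] * v.z + a.m[0][3] * v.w;
    r.y = a.m[1][0] * v.x + a.m[1][1] * v.y + a.m[1][2] * v.z + a.m[1][3] * v.w;
    r.z = a.m[2][0] * v.x + a.m[2][1] * v.y + a.m[2][2] * v.z + a.m[2][3] * v.w;
    r.w = a.m[3][0] * v.x + a.m[3][1] * v.y + a.m[3][2] * v.z + a.m[3][3] * v.w;
    return r;
}

// Matrix x Matrix: entry (row, col) is row 'row' of a times column 'col' of b.
Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r;
    for (int row = 0; row < 4; ++row) {
        for (int col = 0; col < 4; ++col) {
            r.m[row][col] = 0.0f;
            for (int k = 0; k < 4; ++k) {
                r.m[row][col] += a.m[row][k] * b.m[k][col];
            }
        }
    }
    return r;
}

Mat4 translation(float X, float Y, float Z) {
    Mat4 t = {{ { 1.0f, 0.0f, 0.0f, X }, { 0.0f, 1.0f, 0.0f, Y },
                { 0.0f, 0.0f, 1.0f, Z }, { 0.0f, 0.0f, 0.0f, 1.0f } }};
    return t;
}

Mat4 scaling(float s) {
    Mat4 m = {{ { s, 0.0f, 0.0f, 0.0f }, { 0.0f, s, 0.0f, 0.0f },
                { 0.0f, 0.0f, s, 0.0f }, { 0.0f, 0.0f, 0.0f, 1.0f } }};
    return m;
}

// The wrong way: translate first, then scale. The matrix applied first is
// written on the right, so this is Scale * Translation.
Mat4 wrongOrder() {
    return multiply(scaling(2.0f), translation(10.0f, 0.0f, 0.0f));
}

// The right way: scale first, then translate, i.e. Translation * Scale.
Mat4 rightOrder() {
    return multiply(translation(10.0f, 0.0f, 0.0f), scaling(2.0f));
}
```

Applied to the ship's center (0,0,0,1), wrongOrder() gives (20,0,0,1) and rightOrder() gives (10,0,0,1), matching the two cases above.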

Matrix-matrix multiplication is very similar to matrix-vector multiplication, so I’ll once again skip some details and redirect you to the Matrices and Quaternions FAQ if needed. For now, we’ll simply ask the computer to do it:

in C++, with GLM:

glm::mat4 myModelMatrix = myTranslationMatrix * myRotationMatrix * myScaleMatrix;

glm::vec4 myTransformedVector = myModelMatrix * myOriginalVector;

in GLSL:

mat4 transform = matrix2 * matrix1; // "mat2" is a GLSL type name, so it cannot be used as a variable name

vec4 out_vec = transform * in_vec;

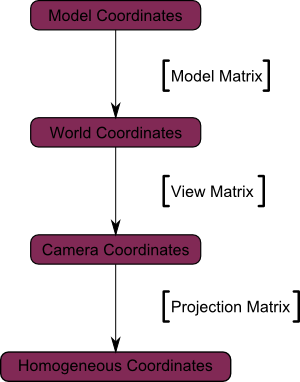

The Model, View and Projection matrices

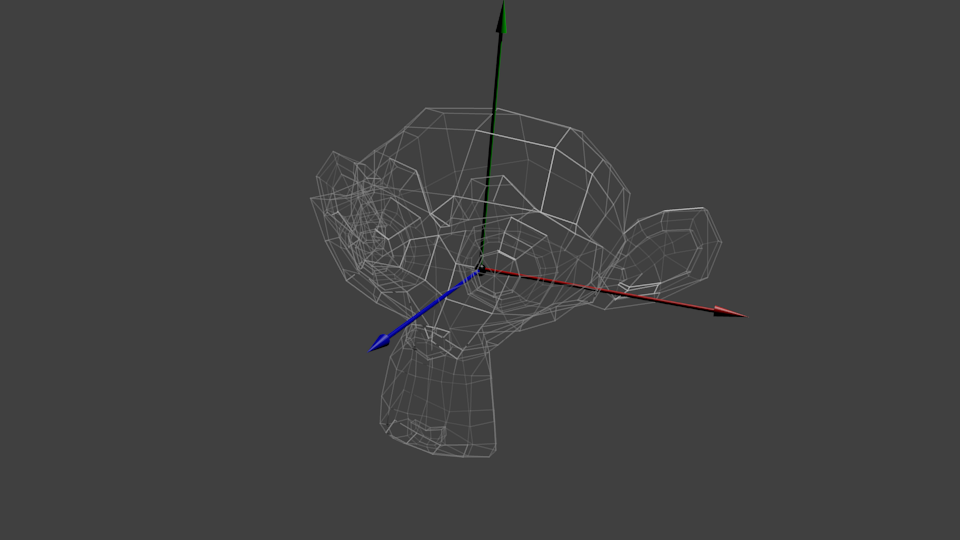

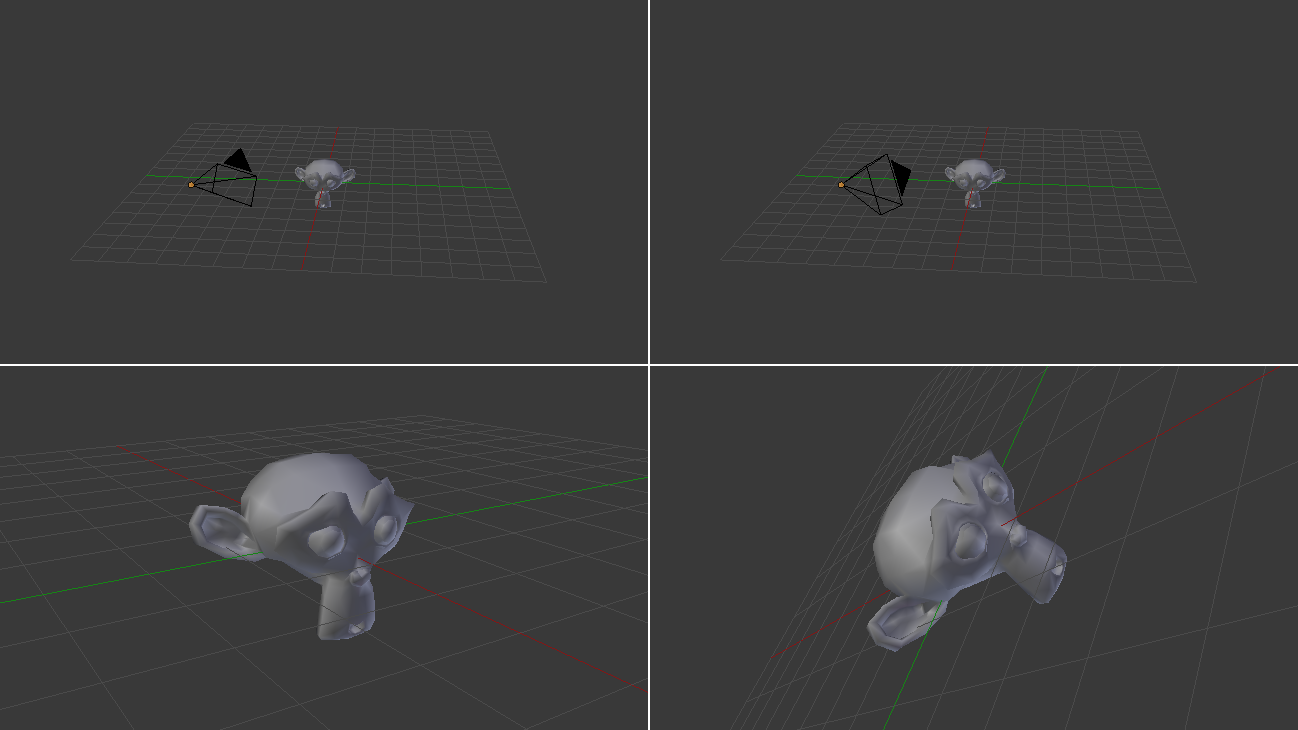

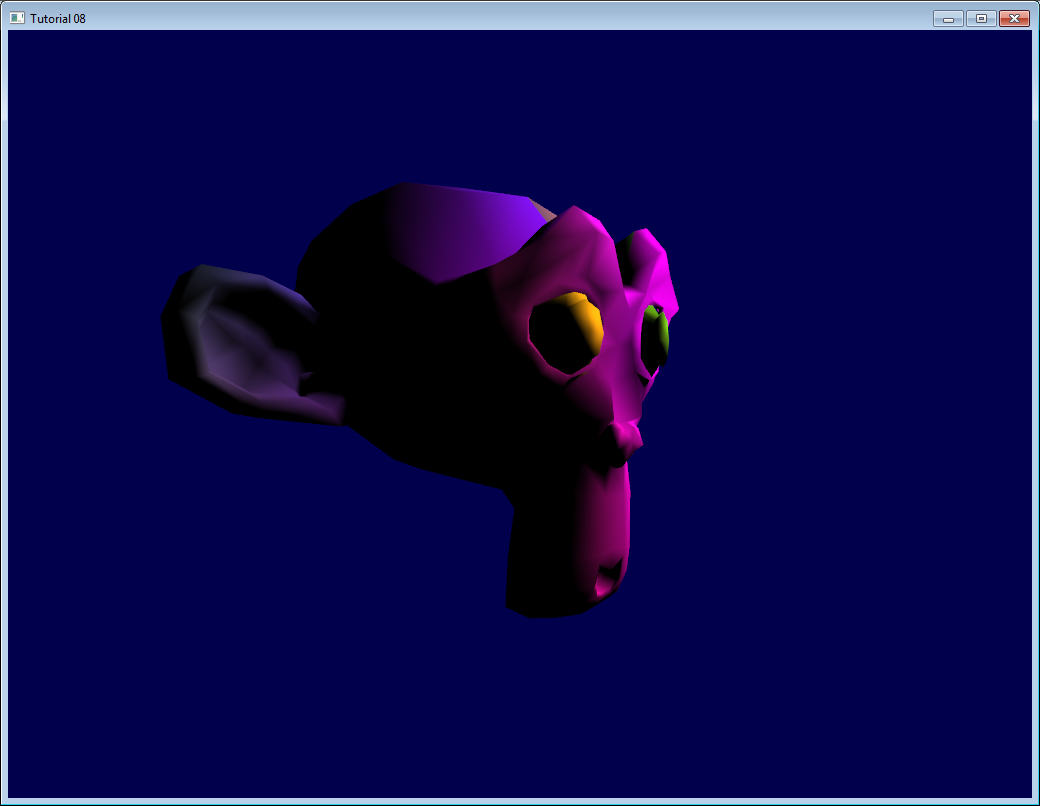

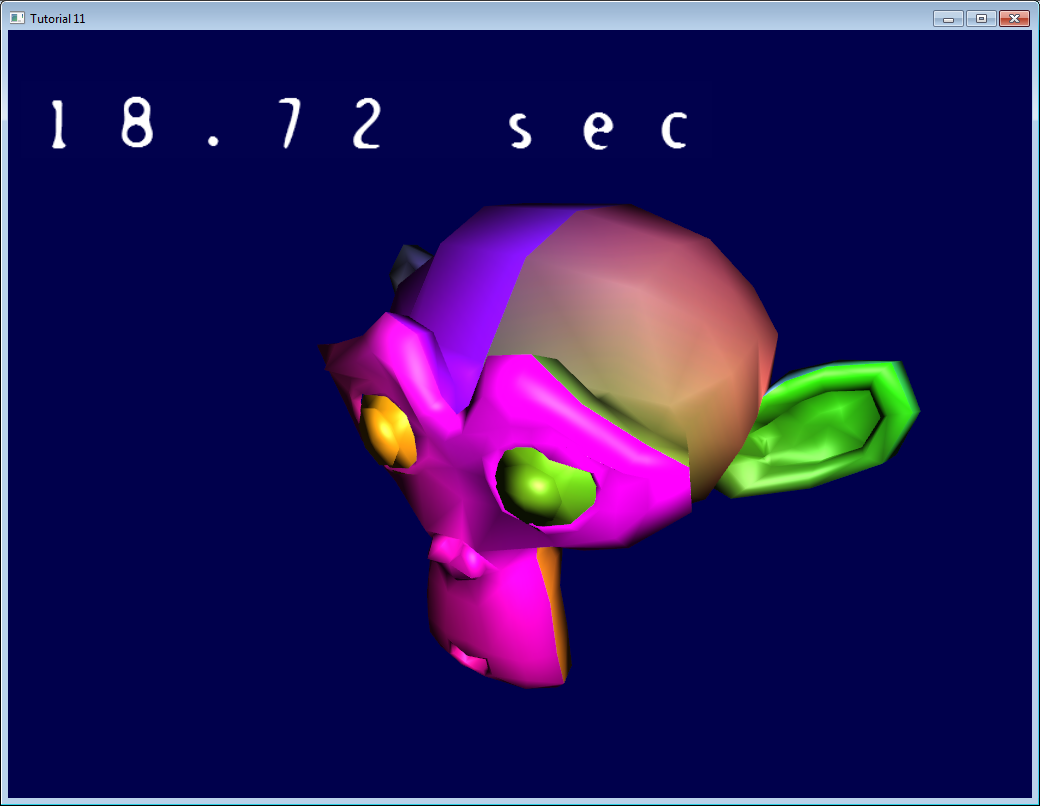

For the rest of this tutorial, we will suppose that we know how to draw Blender’s favourite 3d model: the monkey Suzanne.

The Model, View and Projection matrices are a handy tool to separate transformations cleanly. You don’t have to use them (after all, we didn’t in tutorials 1 and 2). But you should. This is the way everybody does it, because it’s easier this way.

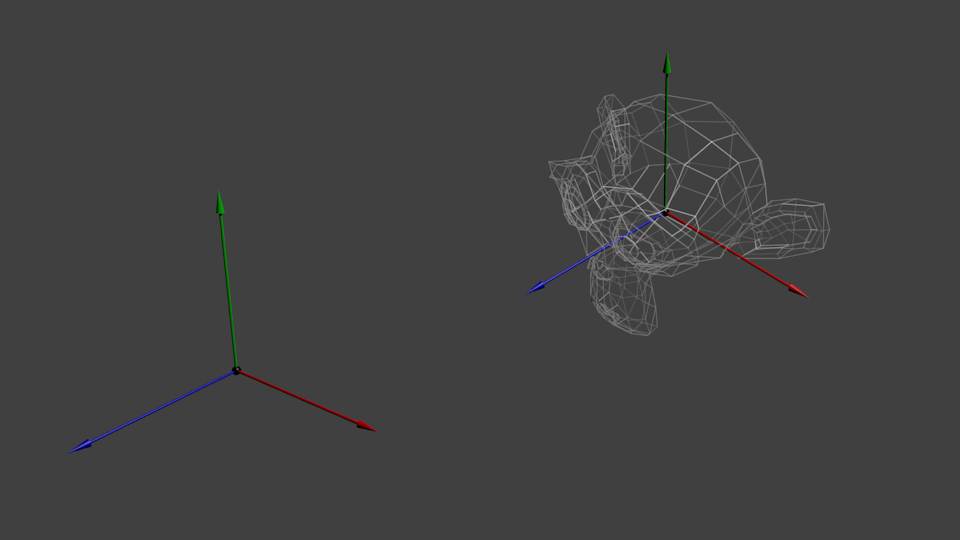

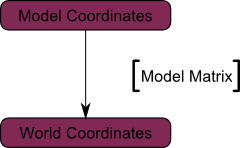

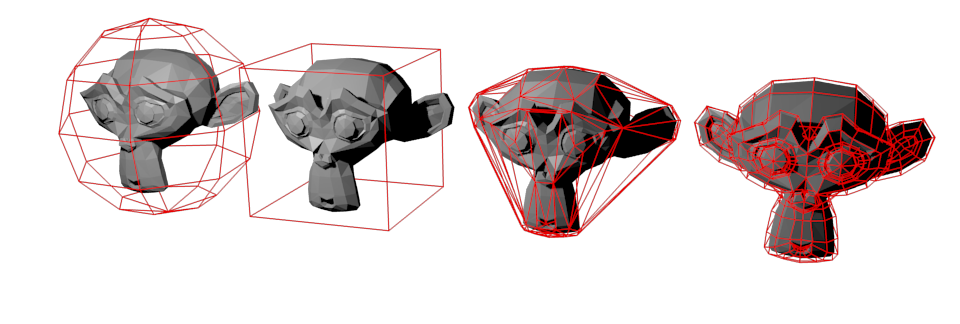

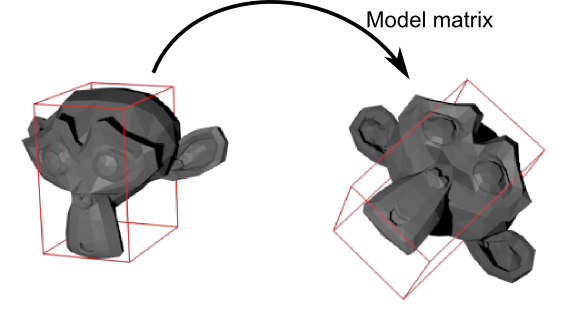

The Model matrix

This model, just as our beloved red triangle, is defined by a set of vertices. The X,Y,Z coordinates of these vertices are defined relative to the object’s center: that is, if a vertex is at (0,0,0), it is at the center of the object.

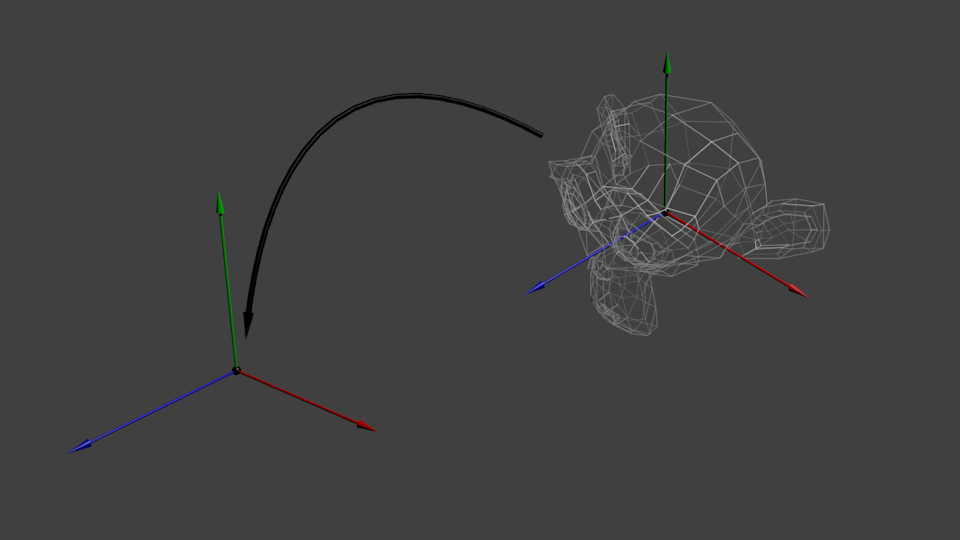

We’d like to be able to move this model, maybe because the player controls it with the keyboard and the mouse. Easy, you just learnt to do so: translation\*rotation\*scale, and done. You apply this matrix to all your vertices at each frame (in GLSL, not in C++!) and everything moves. Something that doesn’t move will be at the center of the world.

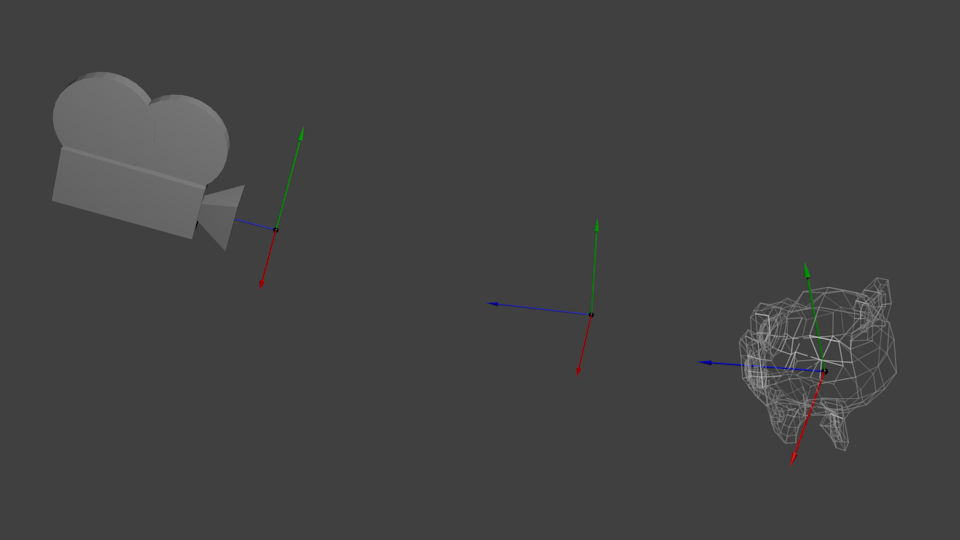

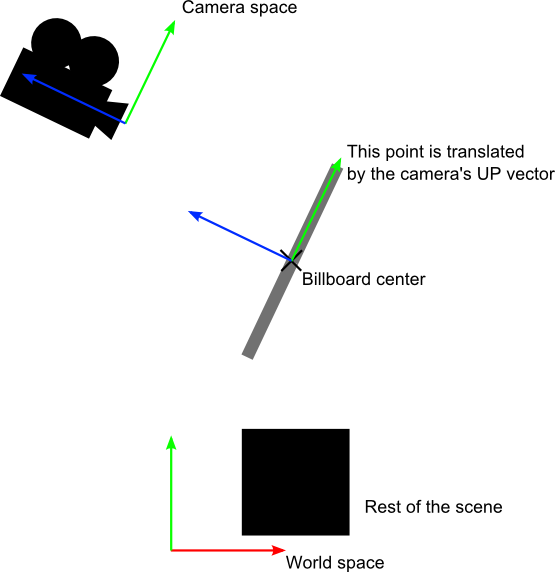

Your vertices are now in World Space. This is the meaning of the black arrow in the image below: We went from Model Space (all vertices defined relatively to the center of the model) to World Space (all vertices defined relatively to the center of the world).

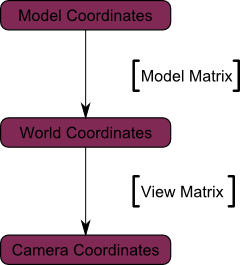

We can sum this up with the following diagram:

The View matrix

Let’s quote Futurama again:

The engines don’t move the ship at all. The ship stays where it is and the engines move the universe around it.

When you think about it, the same applies to cameras. If you want to view a mountain from another angle, you can either move the camera… or move the mountain. While not practical in real life, this is really simple and handy in Computer Graphics.

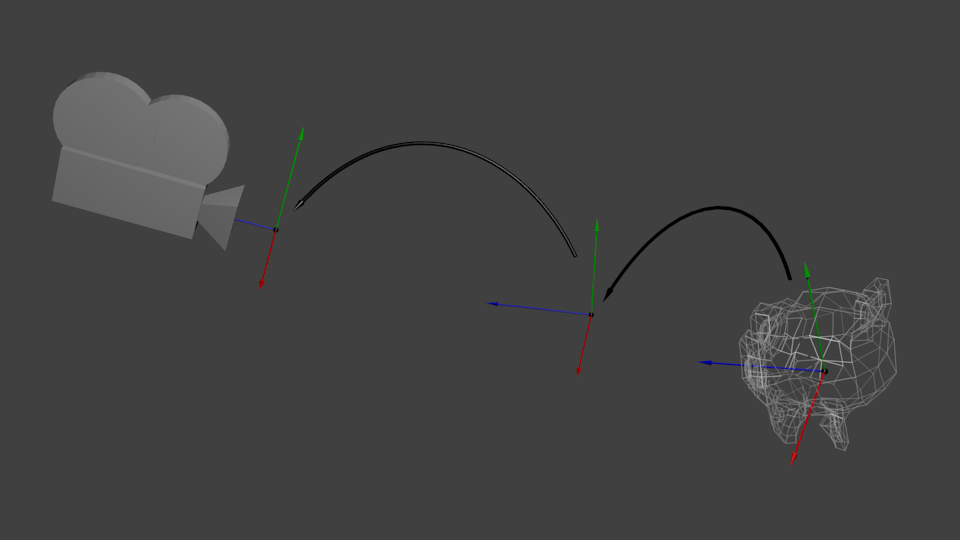

So initially your camera is at the origin of the World Space. In order to move the world, you simply introduce another matrix. Let’s say you want to move your camera 3 units to the right (+X). This is equivalent to moving your whole world (meshes included) 3 units to the LEFT! (-X). While your brain melts, let’s do it:

// Use #include <glm/gtc/matrix_transform.hpp> and #include <glm/gtx/transform.hpp>

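glm::mat4 ViewMatrix = glm::translate(glm::mat4(1.0f), glm::vec3(-3.0f, 0.0f, 0.0f)); // glm::mat4(1.0f) is the identity; recent GLM versions do not initialise glm::mat4() by default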

Again, the image below illustrates this: We went from World Space (all vertices defined relatively to the center of the world, as we made so in the previous section) to Camera Space (all vertices defined relatively to the camera).

Before your head explodes from this, enjoy GLM’s great glm::lookAt function:

glm::mat4 CameraMatrix = glm::lookAt(

cameraPosition, // the position of your camera, in world space

cameraTarget, // where you want to look at, in world space

upVector // probably glm::vec3(0,1,0), but (0,-1,0) would make you look upside-down, which can be great too

);
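To see what such a function has to compute, here is a minimal sketch without GLM. It assumes the usual OpenGL convention (right-handed, camera looking down -Z); the `Vec3`, `Camera`, `makeCamera` and `worldToCamera` names are invented for this example, and it transforms a single point directly instead of building a 4x4 matrix.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// The three axes of the camera plus its position, all in world space.
struct Camera { Vec3 right, up, forward, position; };

Camera makeCamera(Vec3 position, Vec3 target, Vec3 upHint) {
    Vec3 forward = normalize(sub(target, position));
    Vec3 right   = normalize(cross(forward, upHint));
    Vec3 up      = cross(right, forward);   // re-derived so the axes are perpendicular
    return {right, up, forward, position};
}

// World space -> Camera space: move the world so the camera sits at the
// origin, then express the point along the camera's own axes.
// Points in front of the camera end up with a negative z.
Vec3 worldToCamera(const Camera& cam, Vec3 p) {
    Vec3 d = sub(p, cam.position);
    return {dot(d, cam.right), dot(d, cam.up), -dot(d, cam.forward)};
}
```

With the camera at (3,0,0) looking straight ahead along -Z, the world origin lands at x = -3 in Camera Space: moving the camera 3 units to the right moved the world 3 units to the left.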

Here’s the compulsory diagram:

This is not over yet, though.

The Projection matrix

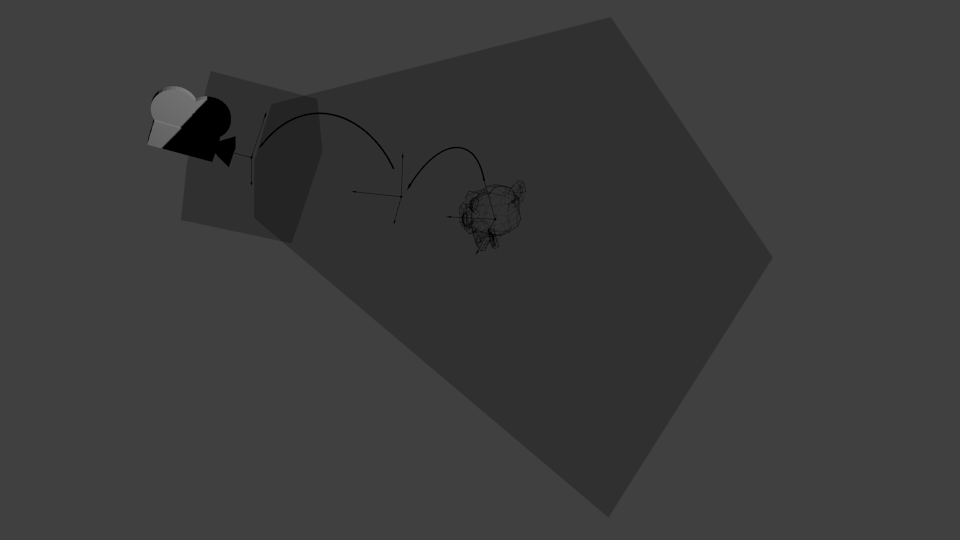

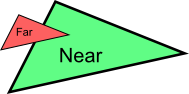

We’re now in Camera Space. This means that after all these transformations, a vertex that happens to have x==0 and y==0 should be rendered at the center of the screen. But we can’t use only the x and y coordinates to determine where an object should be put on the screen: its distance to the camera (z) counts, too! For two vertices with similar x and y coordinates, the vertex that is farther from the camera will be closer to the center of the screen than the other.

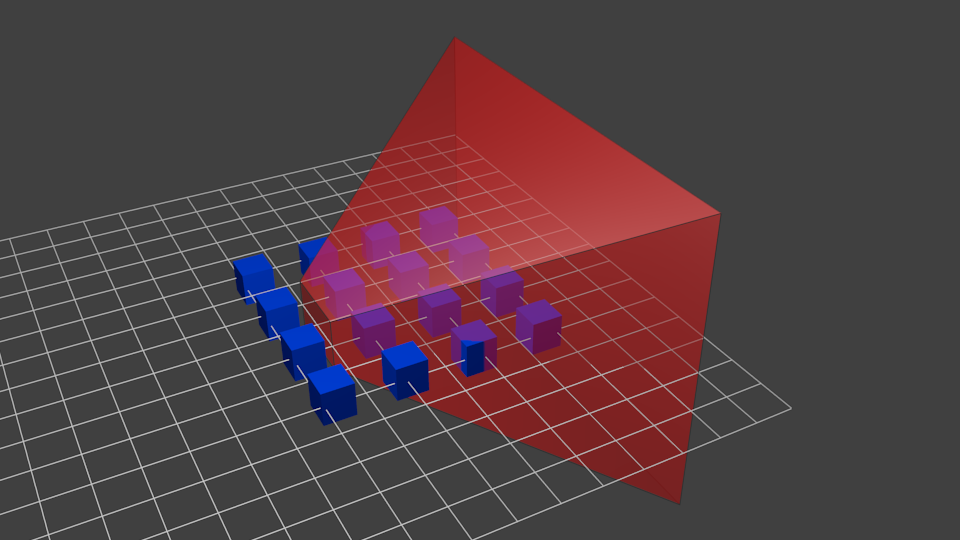

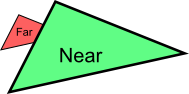

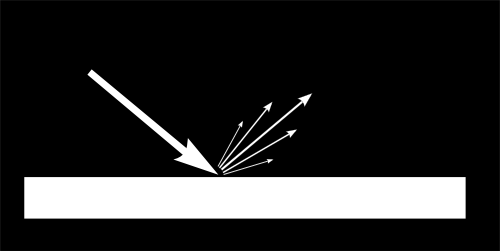

This is called a perspective projection:

And luckily for us, a 4x4 matrix can represent this projection (see the Addendum for a caveat):

// Generates a really hard-to-read matrix, but a normal, standard 4x4 matrix nonetheless

glm::mat4 projectionMatrix = glm::perspective(

glm::radians(FoV), // The vertical Field of View, in radians: the amount of "zoom". Think "camera lens". Usually between 90° (extra wide) and 30° (quite zoomed in)

4.0f / 3.0f, // Aspect Ratio. Depends on the size of your window. Notice that 4/3 == 800/600 == 1280/960, sounds familiar?

0.1f, // Near clipping plane. Keep as big as possible, or you'll get precision issues.

100.0f // Far clipping plane. Keep as small as possible.

);

One last time:

We went from Camera Space (all vertices defined relatively to the camera) to Homogeneous Space (all vertices defined in a small cube. Everything inside the cube is onscreen).

And the final diagram:

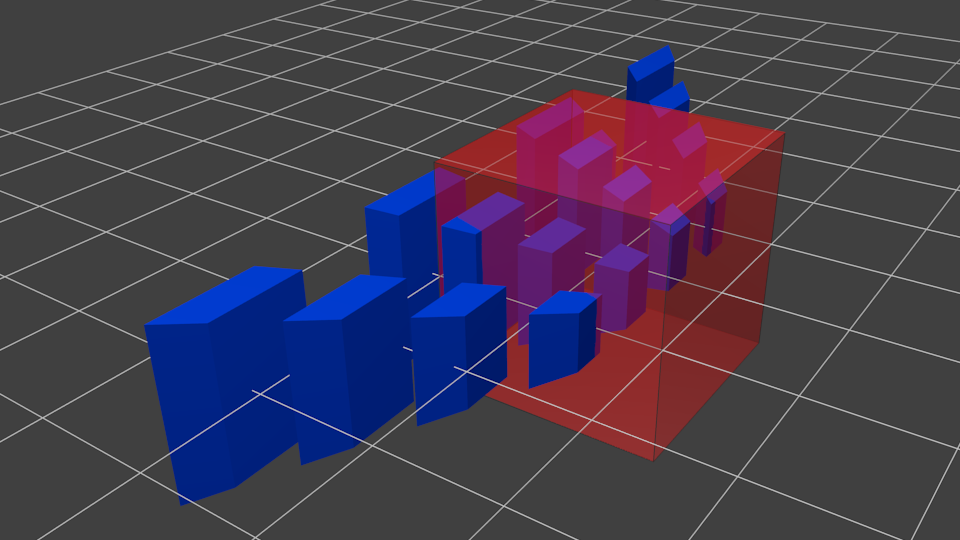

Here’s another diagram so that you understand better what happens with this Projection stuff. Before projection, we’ve got our blue objects, in Camera Space, and the red shape represents the frustum of the camera: the part of the scene that the camera is actually able to see.

Multiplying everything by the Projection Matrix has the following effect:

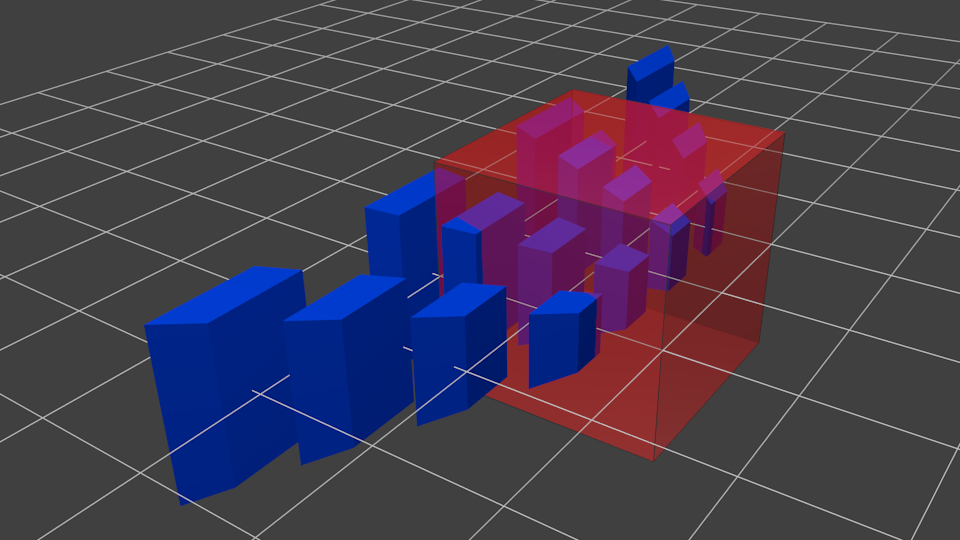

In this image, the frustum is now a perfect cube (between -1 and 1 on all axes, it’s a little bit hard to see it), and all blue objects have been deformed in the same way. Thus, the objects that are near the camera ( = near the face of the cube that we can’t see) are big, the others are smaller. Seems like real life!

Let’s see what it looks like from the “behind” the frustum:

Here you get your image! It’s just a little bit too square, so another mathematical transformation is applied (this one is automatic, you don’t have to do it yourself in the shader) to fit this to the actual window size:

And this is the image that is actually rendered!

Cumulating transformations: the ModelViewProjection matrix

…Just a standard matrix multiplication as you already love them!

// C++: compute the matrix

glm::mat4 MVPmatrix = projection * view * model; // Remember: inverted!

// GLSL: apply it

transformed_vertex = MVP * in_vertex;

Putting it all together

- First step: include the GLM GTC matrix transform functions:

#include <glm/gtc/matrix_transform.hpp>

- Second step: generating our MVP matrix. This must be done for each model you render.

// Projection matrix: 45° Field of View, 4:3 ratio, display range: 0.1 unit <-> 100 units

glm::mat4 Projection = glm::perspective(glm::radians(45.0f), (float) width / (float)height, 0.1f, 100.0f);

// Or, for an ortho camera:

//glm::mat4 Projection = glm::ortho(-10.0f,10.0f,-10.0f,10.0f,0.0f,100.0f); // In world coordinates

// Camera matrix

glm::mat4 View = glm::lookAt(

glm::vec3(4,3,3), // Camera is at (4,3,3), in World Space

glm::vec3(0,0,0), // and looks at the origin

glm::vec3(0,1,0) // Head is up (set to 0,-1,0 to look upside-down)

);

// Model matrix: an identity matrix (model will be at the origin)

glm::mat4 Model = glm::mat4(1.0f);

// Our ModelViewProjection: multiplication of our 3 matrices

glm::mat4 mvp = Projection * View * Model; // Remember, matrix multiplication is the other way around

- Third step: give it to GLSL

// Get a handle for our "MVP" uniform

// Only during the initialisation

GLuint MatrixID = glGetUniformLocation(programID, "MVP");

// Send our transformation to the currently bound shader, in the "MVP" uniform

// This is done in the main loop since each model will have a different MVP matrix (At least for the M part)

glUniformMatrix4fv(MatrixID, 1, GL_FALSE, &mvp[0][0]);

- Fourth step: use it in GLSL to transform our vertices in SimpleVertexShader.vertexshader

// Input vertex data, different for all executions of this shader.

layout(location = 0) in vec3 vertexPosition_modelspace;

// Values that stay constant for the whole mesh.

uniform mat4 MVP;

void main(){

// Output position of the vertex, in clip space: MVP * position

gl_Position = MVP * vec4(vertexPosition_modelspace,1);

}

Done! Here is the same triangle as in tutorial 2, still at the origin (0,0,0), but viewed in perspective from point (4,3,3), heads up (0,1,0), with a 45° field of view.

In tutorial 6 you’ll learn how to modify these values dynamically using the keyboard and the mouse to create a game-like camera, but first, we’ll learn how to give our 3D models some colour (tutorial 4) and textures (tutorial 5).

Exercises

- Try changing the glm::perspective

- Instead of using a perspective projection, use an orthographic projection (glm::ortho)

- Modify ModelMatrix to translate, rotate, then scale the triangle

- Do the same thing, but in different orders. What do you notice? What is the “best” order that you would want to use for a character?

Addendum

- […]luckily for us, a 4x4 matrix can represent this projection: Actually, this is not correct. A perspective transformation is not affine, and as such, can’t be represented entirely by a matrix. After being multiplied by the ProjectionMatrix, homogeneous coordinates are divided by their own W component. This W component happens to be -Z (because the projection matrix has been crafted this way). This way, points that are far away from the origin are divided by a big Z; their X and Y coordinates become smaller; points become closer to each other, objects seem smaller; and this is what gives the perspective. This transformation is done in hardware, and is not visible in the shader.
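The two stages described in this note can be sketched without GLM. The code below assumes the usual OpenGL-style perspective matrix (right-handed, depth mapped to [-1, 1]), which as far as I know is what glm::perspective builds by default; `projectToClip` and `perspectiveDivide` are names made up for this illustration, and the matrix product is written out by hand.

```cpp
#include <cmath>

struct Vec4 { float x, y, z, w; };

// Stage 1: multiply a Camera Space point by the projection matrix.
// Only the non-zero entries of the matrix are written out.
// Note the last component: the resulting w is simply -z.
Vec4 projectToClip(Vec4 p, float fovY, float aspect, float zNear, float zFar) {
    float f = 1.0f / std::tan(fovY / 2.0f);
    return {
        f / aspect * p.x,
        f * p.y,
        -(zFar + zNear) / (zFar - zNear) * p.z
            - 2.0f * zFar * zNear / (zFar - zNear) * p.w,
        -p.z
    };
}

// Stage 2: the divide by w. The hardware does this after the vertex shader.
Vec4 perspectiveDivide(Vec4 c) {
    return {c.x / c.w, c.y / c.w, c.z / c.w, 1.0f};
}
```

With a 90° field of view and a square window, a point at (1, 0, -2) ends up at x = 0.5 after the divide, and the same point pushed back to z = -4 ends up at x = 0.25: twice as far, twice as close to the center of the screen.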

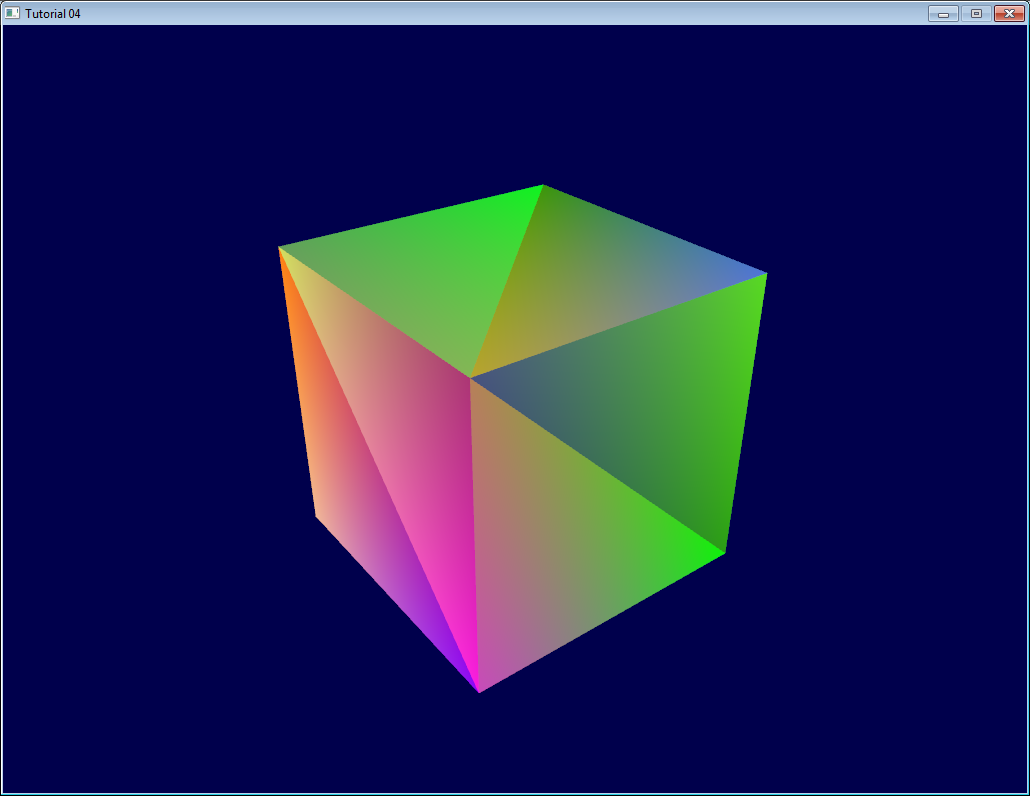

Tutorial 4 : A Colored Cube

Welcome to the 4th tutorial ! You will do the following :

- Draw a cube instead of the boring triangle

- Add some fancy colors

- Learn what the Z-Buffer is

Draw a cube

A cube has six square faces. Since OpenGL only knows about triangles, we’ll have to draw 12 triangles : two for each face. We just define our vertices in the same way as we did for the triangle.

// Our vertices. Three consecutive floats give a 3D vertex; Three consecutive vertices give a triangle.

// A cube has 6 faces with 2 triangles each, so this makes 6*2=12 triangles, and 12*3 vertices

static const GLfloat g_vertex_buffer_data[] = {

-1.0f,-1.0f,-1.0f, // triangle 1 : begin

-1.0f,-1.0f, 1.0f,

-1.0f, 1.0f, 1.0f, // triangle 1 : end

1.0f, 1.0f,-1.0f, // triangle 2 : begin

-1.0f,-1.0f,-1.0f,

-1.0f, 1.0f,-1.0f, // triangle 2 : end

1.0f,-1.0f, 1.0f,

-1.0f,-1.0f,-1.0f,

1.0f,-1.0f,-1.0f,

1.0f, 1.0f,-1.0f,

1.0f,-1.0f,-1.0f,

-1.0f,-1.0f,-1.0f,

-1.0f,-1.0f,-1.0f,

-1.0f, 1.0f, 1.0f,

-1.0f, 1.0f,-1.0f,

1.0f,-1.0f, 1.0f,

-1.0f,-1.0f, 1.0f,

-1.0f,-1.0f,-1.0f,

-1.0f, 1.0f, 1.0f,

-1.0f,-1.0f, 1.0f,

1.0f,-1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

1.0f,-1.0f,-1.0f,

1.0f, 1.0f,-1.0f,

1.0f,-1.0f,-1.0f,

1.0f, 1.0f, 1.0f,

1.0f,-1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

1.0f, 1.0f,-1.0f,

-1.0f, 1.0f,-1.0f,

1.0f, 1.0f, 1.0f,

-1.0f, 1.0f,-1.0f,

-1.0f, 1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

-1.0f, 1.0f, 1.0f,

1.0f,-1.0f, 1.0f

};

The OpenGL buffer is created, bound, filled and configured with the standard functions (glGenBuffers, glBindBuffer, glBufferData, glVertexAttribPointer) ; see Tutorial 2 for a quick reminder. The draw call does not change either, you just have to set the right number of vertices that must be drawn :

// Draw the cube !

glDrawArrays(GL_TRIANGLES, 0, 12*3); // 12*3 vertices starting at 0 -> 12 triangles -> 6 squares

A few remarks on this code :

- For now, our 3D model is fixed : in order to change it, you have to modify the source code, recompile the application, and hope for the best. We’ll learn how to load dynamic models in tutorial 7.

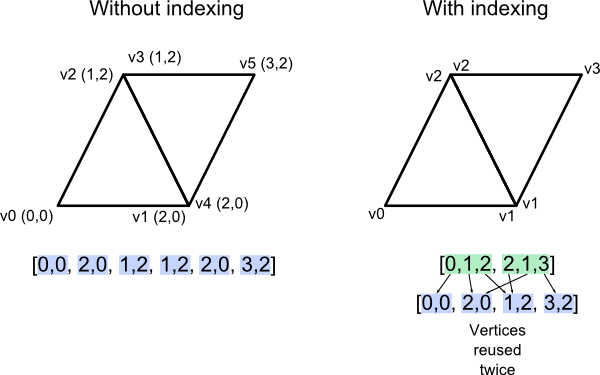

- Each vertex is actually written at least 3 times (search “-1.0f,-1.0f,-1.0f” in the code above). This is an awful waste of memory. We’ll learn how to deal with this in tutorial 9.

You now have all the needed pieces to draw the cube in white. Make the shaders work ! go on, at least try :)

Adding colors

A color is, conceptually, exactly the same as a position : it’s just data. In OpenGL terms, they are “attributes”. As a matter of fact, we already used this with glEnableVertexAttribArray() and glVertexAttribPointer(). Let’s add another attribute. The code is going to be very similar.

First, declare your colors : one RGB triplet per vertex. Here I generated some randomly, so the result won’t look that good, but you can do something better, for instance by copying the vertex’s position into its own color.

// One color for each vertex. They were generated randomly.

static const GLfloat g_color_buffer_data[] = {

0.583f, 0.771f, 0.014f,

0.609f, 0.115f, 0.436f,

0.327f, 0.483f, 0.844f,

0.822f, 0.569f, 0.201f,

0.435f, 0.602f, 0.223f,

0.310f, 0.747f, 0.185f,

0.597f, 0.770f, 0.761f,

0.559f, 0.436f, 0.730f,

0.359f, 0.583f, 0.152f,

0.483f, 0.596f, 0.789f,

0.559f, 0.861f, 0.639f,

0.195f, 0.548f, 0.859f,

0.014f, 0.184f, 0.576f,

0.771f, 0.328f, 0.970f,

0.406f, 0.615f, 0.116f,

0.676f, 0.977f, 0.133f,

0.971f, 0.572f, 0.833f,

0.140f, 0.616f, 0.489f,

0.997f, 0.513f, 0.064f,

0.945f, 0.719f, 0.592f,

0.543f, 0.021f, 0.978f,

0.279f, 0.317f, 0.505f,

0.167f, 0.620f, 0.077f,

0.347f, 0.857f, 0.137f,

0.055f, 0.953f, 0.042f,

0.714f, 0.505f, 0.345f,

0.783f, 0.290f, 0.734f,

0.722f, 0.645f, 0.174f,

0.302f, 0.455f, 0.848f,

0.225f, 0.587f, 0.040f,

0.517f, 0.713f, 0.338f,

0.053f, 0.959f, 0.120f,

0.393f, 0.621f, 0.362f,

0.673f, 0.211f, 0.457f,

0.820f, 0.883f, 0.371f,

0.982f, 0.099f, 0.879f

};

The buffer is created, bound and filled in the exact same way as the previous one :

GLuint colorbuffer;

glGenBuffers(1, &colorbuffer);

glBindBuffer(GL_ARRAY_BUFFER, colorbuffer);

glBufferData(GL_ARRAY_BUFFER, sizeof(g_color_buffer_data), g_color_buffer_data, GL_STATIC_DRAW);

The configuration is also identical :

// 2nd attribute buffer : colors

glEnableVertexAttribArray(1);

glBindBuffer(GL_ARRAY_BUFFER, colorbuffer);

glVertexAttribPointer(

1, // attribute. No particular reason for 1, but must match the layout in the shader.

3, // size

GL_FLOAT, // type

GL_FALSE, // normalized?

0, // stride

(void*)0 // array buffer offset

);

Now, in the vertex shader, we have access to this additional buffer :

// Notice that the "1" here equals the "1" in glVertexAttribPointer

layout(location = 1) in vec3 vertexColor;

In our case, we won’t do anything fancy with it in the vertex shader. We will simply forward it to the fragment shader :

// Output data ; will be interpolated for each fragment.

out vec3 fragmentColor;

void main(){

[...]

// The color of each vertex will be interpolated

// to produce the color of each fragment

fragmentColor = vertexColor;

}

In the fragment shader, you declare fragmentColor again :

// Interpolated values from the vertex shaders

in vec3 fragmentColor;

… and copy it in the final output color :

// Output data

out vec3 color;

void main(){

// Output color = color specified in the vertex shader,

// interpolated between all 3 surrounding vertices

color = fragmentColor;

}

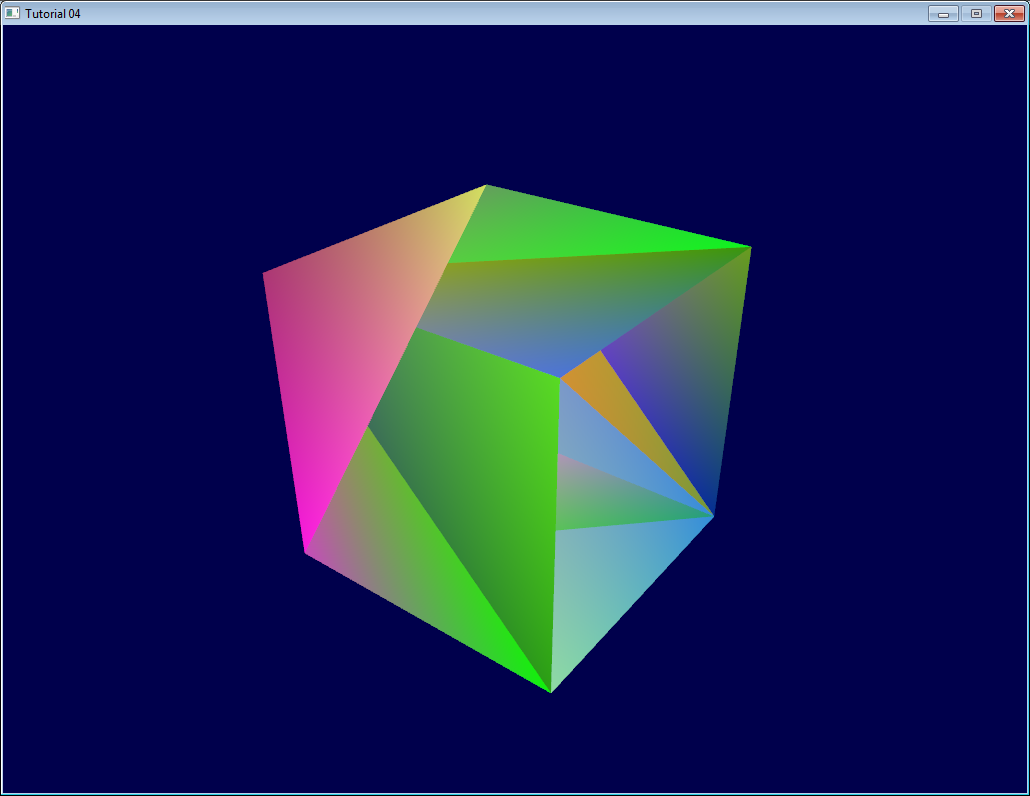

And that’s what we get :

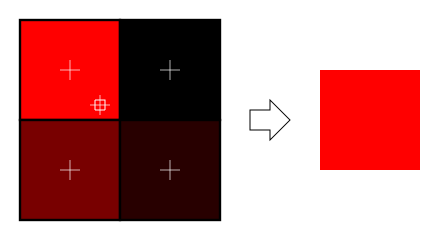

Urgh. Ugly. To understand what happens, here’s what happens when you draw a “far” triangle and a “near” triangle :

Seems OK. Now draw the “far” triangle last :

It overdraws the “near” one, even though it’s supposed to be behind it ! This is what happens with our cube : some faces are supposed to be hidden, but since they are drawn last, they are visible. Let’s call the Z-Buffer to the rescue !

Quick Note 1 : If you don’t see the problem, change your camera position to (4,3,-3)

Quick Note 2 : if “color is like position, it’s an attribute”, why do we need to declare out vec3 fragmentColor; and in vec3 fragmentColor; for the color, and not for the position ? Because the position is actually a bit special : It’s the only thing that is compulsory (or OpenGL wouldn’t know where to draw the triangle !). So in the vertex shader, gl_Position is a “built-in” variable.

The Z-Buffer

The solution to this problem is to store the depth (i.e. “Z”) component of each fragment in a buffer, and each and every time you want to write a fragment, you first check if you should (i.e the new fragment is closer than the previous one).

You can do this yourself, but it’s so much simpler to just ask the hardware to do it itself :

// Enable depth test

glEnable(GL_DEPTH_TEST);

// Accept fragment if it is closer to the camera than the former one

glDepthFunc(GL_LESS);

You also need to clear the depth each frame, instead of only the color :

// Clear the screen

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

And this is enough to solve all your problems.
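If you are curious about what the hardware does behind these calls, here is a rough software sketch of the same idea. The `Framebuffer` type and its methods are invented for this example; depth values are assumed to lie in [0, 1] with 1 being the farthest.

```cpp
#include <cstddef>
#include <vector>

// A toy framebuffer: one colour and one depth value per pixel.
struct Framebuffer {
    int width;
    int height;
    std::vector<unsigned int> color;
    std::vector<float> depth;

    Framebuffer(int w, int h)
        : width(w), height(h),
          color(static_cast<std::size_t>(w) * h, 0u),
          depth(static_cast<std::size_t>(w) * h, 1.0f) {}

    // Plays the role of glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT):
    // black everywhere, and every pixel as far away as possible.
    void clear() {
        for (std::size_t i = 0; i < color.size(); ++i) {
            color[i] = 0u;
            depth[i] = 1.0f;
        }
    }

    // Plays the role of the depth test with GL_LESS: the fragment is kept
    // only if it is closer than what is already stored for this pixel.
    bool writeFragment(int x, int y, float z, unsigned int rgb) {
        std::size_t i = static_cast<std::size_t>(y) * width + x;
        if (!(z < depth[i])) return false;   // hidden: discard
        depth[i] = z;
        color[i] = rgb;
        return true;
    }
};
```

Whatever the drawing order, the pixel ends up with the colour of the nearest fragment, which is exactly what was missing in the ugly screenshot above.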

Exercises

- Draw the cube AND the triangle, at different locations. You will need to generate 2 MVP matrices, to make 2 draw calls in the main loop, but only 1 shader is required.

- Generate the color values yourself. Some ideas : At random, so that colors change at each run; Depending on the position of the vertex; a mix of the two; Some other creative idea :) In case you don’t know C, here’s the syntax :

static GLfloat g_color_buffer_data[12*3*3];

for (int v = 0; v < 12*3 ; v++){

g_color_buffer_data[3*v+0] = your red color here;

g_color_buffer_data[3*v+1] = your green color here;

g_color_buffer_data[3*v+2] = your blue color here;

}

- Once you’ve done that, make the colors change each frame. You’ll have to call glBufferData each frame. Make sure the appropriate buffer is bound (glBindBuffer) before !

Tutorial 5 : A Textured Cube

- About UV coordinates

- Loading .BMP images yourself

- Using the texture in OpenGL

- What is filtering and mipmapping, and how to use them

- How to load texture with GLFW

- Compressed Textures

- Conclusion

- Exercises

- References

In this tutorial, you will learn :

- What are UV coordinates

- How to load textures yourself

- How to use them in OpenGL

- What is filtering and mipmapping, and how to use them

- How to load texture more robustly with GLFW

- What the alpha channel is

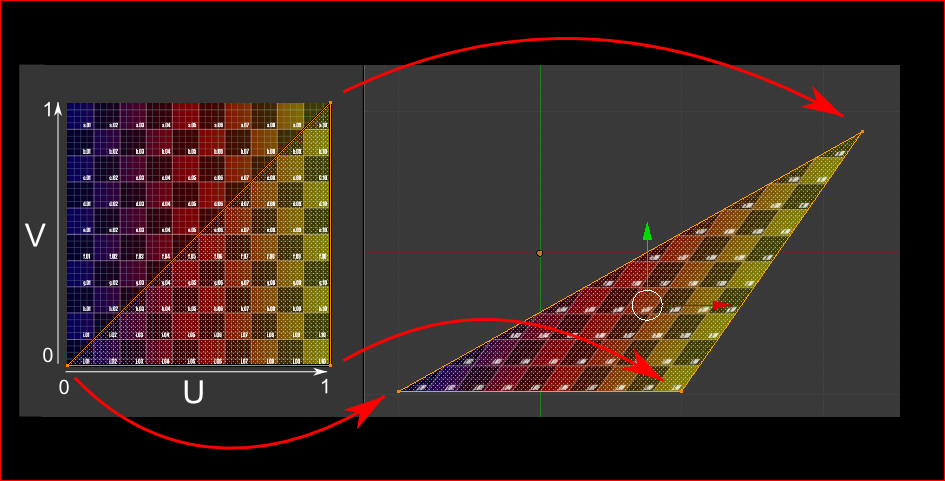

About UV coordinates

When texturing a mesh, you need a way to tell OpenGL which part of the image has to be used for each triangle. This is done with UV coordinates.

Each vertex can have, on top of its position, a couple of floats, U and V. These coordinates are used to access the texture, in the following way :

Notice how the texture is distorted on the triangle.

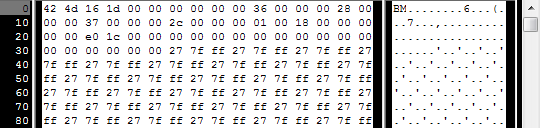

Loading .BMP images yourself

Knowing the BMP file format is not crucial : plenty of libraries can load BMP files for you. But it’s very simple and can help you understand how things work under the hood. So we’ll write a BMP file loader from scratch, so that you know how it works, and never use it again.

Here is the declaration of the loading function :

GLuint loadBMP_custom(const char * imagepath);

so it’s used like this :

GLuint image = loadBMP_custom("./my_texture.bmp");

Let’s see how to read a BMP file, then.

First, we’ll need some data. These variables will be set when reading the file.

// Data read from the header of the BMP file

unsigned char header[54]; // Each BMP file begins with a 54-byte header

unsigned int dataPos; // Position in the file where the actual data begins

unsigned int width, height;

unsigned int imageSize; // = width*height*3

// Actual RGB data

unsigned char * data;

We now have to actually open the file

// Open the file

FILE * file = fopen(imagepath,"rb");

if (!file){printf("Image could not be opened\n"); return 0;}

The first thing in the file is a 54-byte header. It contains information such as “Is this file really a BMP file?”, the size of the image, the number of bits per pixel, etc. So let’s read this header :

if ( fread(header, 1, 54, file)!=54 ){ // If not 54 bytes read : problem

printf("Not a correct BMP file\n");

fclose(file); // Don't leak the file handle

return 0; // The function returns a GLuint; 0 means "no texture"

}

The header always begins with BM. As a matter of fact, here’s what you get when you open a .BMP file in a hexadecimal editor :

So we have to check that the two first bytes are really ‘B’ and ‘M’ :

if ( header[0]!='B' || header[1]!='M' ){

printf("Not a correct BMP file\n");

fclose(file); // Don't leak the file handle

return 0;

}

Now we can read the size of the image, the location of the data in the file, etc :

// Read ints from the byte array

dataPos = *(int*)&(header[0x0A]);

imageSize = *(int*)&(header[0x22]);

width = *(int*)&(header[0x12]);

height = *(int*)&(header[0x16]);

We have to make up some info if it’s missing :

// Some BMP files are misformatted, guess missing information

if (imageSize==0) imageSize=width*height*3; // 3 : one byte for each Red, Green and Blue component

if (dataPos==0) dataPos=54; // The BMP header is done that way
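The pointer casts above are short, but they rely on the machine being little-endian and tolerant of unaligned reads. As a hedged alternative, the same fields can be assembled byte by byte. This is only a sketch: `BmpInfo`, `readU32LE` and `parseBmpHeader` are names invented here, and like the code above it ignores the rarer BMP variants (compressed data, negative heights, and so on).

```cpp
#include <cstddef>
#include <cstdint>

// The fields we care about, plus a flag telling whether the header looked sane.
struct BmpInfo {
    std::uint32_t dataPos;
    std::uint32_t imageSize;
    std::uint32_t width;
    std::uint32_t height;
    bool valid;
};

// BMP stores its integers in little-endian order: least significant byte first.
std::uint32_t readU32LE(const unsigned char* bytes, std::size_t offset) {
    return  static_cast<std::uint32_t>(bytes[offset])
         | (static_cast<std::uint32_t>(bytes[offset + 1]) << 8)
         | (static_cast<std::uint32_t>(bytes[offset + 2]) << 16)
         | (static_cast<std::uint32_t>(bytes[offset + 3]) << 24);
}

// 'header' must point to the first 54 bytes of the file.
BmpInfo parseBmpHeader(const unsigned char* header) {
    BmpInfo info{};                                   // valid == false by default
    if (header[0] != 'B' || header[1] != 'M') return info;

    info.dataPos   = readU32LE(header, 0x0A);
    info.imageSize = readU32LE(header, 0x22);
    info.width     = readU32LE(header, 0x12);
    info.height    = readU32LE(header, 0x16);

    // Same fallbacks as in the tutorial code
    if (info.imageSize == 0) info.imageSize = info.width * info.height * 3;
    if (info.dataPos == 0)   info.dataPos = 54;

    info.valid = true;
    return info;
}
```

Keeping the parsing in its own function also makes it easy to test with a hand-built header, without touching the disk or OpenGL.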

Now that we know the size of the image, we can allocate some memory to read the image into, and read :

// Create a buffer

data = new unsigned char [imageSize];

// Read the actual data from the file into the buffer

fread(data,1,imageSize,file);

//Everything is in memory now, the file can be closed

fclose(file);

We arrive now at the real OpenGL part. Creating textures is very similar to creating vertex buffers : Create a texture, bind it, fill it, and configure it.

In glTexImage2D, the GL_RGB indicates that we are talking about a 3-component color, and GL_BGR says how exactly it is represented in RAM. As a matter of fact, BMP does not store Red->Green->Blue but Blue->Green->Red, so we have to tell it to OpenGL.

// Create one OpenGL texture

GLuint textureID;

glGenTextures(1, &textureID);

// "Bind" the newly created texture : all future texture functions will modify this texture

glBindTexture(GL_TEXTURE_2D, textureID);

// Give the image to OpenGL

glTexImage2D(GL_TEXTURE_2D, 0,GL_RGB, width, height, 0, GL_BGR, GL_UNSIGNED_BYTE, data);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

We’ll explain those last two lines later. Meanwhile, on the C++-side, you can use your new function to load a texture :

GLuint Texture = loadBMP_custom("uvtemplate.bmp");

Another very important point : **use power-of-two textures !**

- good : 128*128, 256*256, 1024*1024, 2*2…

- bad : 127*128, 3*5, …

- okay but weird : 128*256
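If you want your loader to warn you about this, a classic bit trick does the job. The two helper names below are made up for this sketch.

```cpp
// A power of two has exactly one bit set, so n & (n - 1) clears it to zero.
bool isPowerOfTwo(unsigned int n) {
    return n != 0 && (n & (n - 1)) == 0;
}

// Both dimensions must be powers of two; they don't have to be equal.
bool isPowerOfTwoTexture(unsigned int width, unsigned int height) {
    return isPowerOfTwo(width) && isPowerOfTwo(height);
}
```

For instance, 128*128 and 128*256 pass, while 127*128 and 3*5 don't.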

Using the texture in OpenGL

We’ll have a look at the fragment shader first. Most of it is straightforward :

#version 330 core

// Interpolated values from the vertex shaders

in vec2 UV;

// Output data

out vec3 color;

// Values that stay constant for the whole mesh.

uniform sampler2D myTextureSampler;

void main(){

// Output color = color of the texture at the specified UV

color = texture( myTextureSampler, UV ).rgb;

}

Three things :

- The fragment shader needs UV coordinates. Seems fair.

- It also needs a “sampler2D” in order to know which texture to access (you can access several textures in the same shader)

- Finally, accessing a texture is done with texture(), which gives back a (R,G,B,A) vec4. We’ll see about the A shortly.

The vertex shader is simple too, you just have to pass the UVs to the fragment shader :

#version 330 core

// Input vertex data, different for all executions of this shader.

layout(location = 0) in vec3 vertexPosition_modelspace;

layout(location = 1) in vec2 vertexUV;

// Output data ; will be interpolated for each fragment.

out vec2 UV;

// Values that stay constant for the whole mesh.

uniform mat4 MVP;

void main(){

// Output position of the vertex, in clip space : MVP * position

gl_Position = MVP * vec4(vertexPosition_modelspace,1);

// UV of the vertex. No special space for this one.

UV = vertexUV;

}

Remember “layout(location = 1) in vec3 vertexColor” from Tutorial 4 ? Well, we’ll have to do the exact same thing here, but instead of giving a buffer of (R,G,B) triplets, we’ll give a buffer of (U,V) pairs.

// Two UV coordinates for each vertex. They were created with Blender. You'll learn shortly how to do this yourself.

static const GLfloat g_uv_buffer_data[] = {

0.000059f, 1.0f-0.000004f,

0.000103f, 1.0f-0.336048f,

0.335973f, 1.0f-0.335903f,

1.000023f, 1.0f-0.000013f,

0.667979f, 1.0f-0.335851f,

0.999958f, 1.0f-0.336064f,

0.667979f, 1.0f-0.335851f,

0.336024f, 1.0f-0.671877f,

0.667969f, 1.0f-0.671889f,

1.000023f, 1.0f-0.000013f,

0.668104f, 1.0f-0.000013f,

0.667979f, 1.0f-0.335851f,

0.000059f, 1.0f-0.000004f,

0.335973f, 1.0f-0.335903f,

0.336098f, 1.0f-0.000071f,

0.667979f, 1.0f-0.335851f,

0.335973f, 1.0f-0.335903f,

0.336024f, 1.0f-0.671877f,

1.000004f, 1.0f-0.671847f,

0.999958f, 1.0f-0.336064f,

0.667979f, 1.0f-0.335851f,

0.668104f, 1.0f-0.000013f,

0.335973f, 1.0f-0.335903f,

0.667979f, 1.0f-0.335851f,

0.335973f, 1.0f-0.335903f,

0.668104f, 1.0f-0.000013f,

0.336098f, 1.0f-0.000071f,

0.000103f, 1.0f-0.336048f,

0.000004f, 1.0f-0.671870f,

0.336024f, 1.0f-0.671877f,

0.000103f, 1.0f-0.336048f,

0.336024f, 1.0f-0.671877f,

0.335973f, 1.0f-0.335903f,

0.667969f, 1.0f-0.671889f,

1.000004f, 1.0f-0.671847f,

0.667979f, 1.0f-0.335851f

};

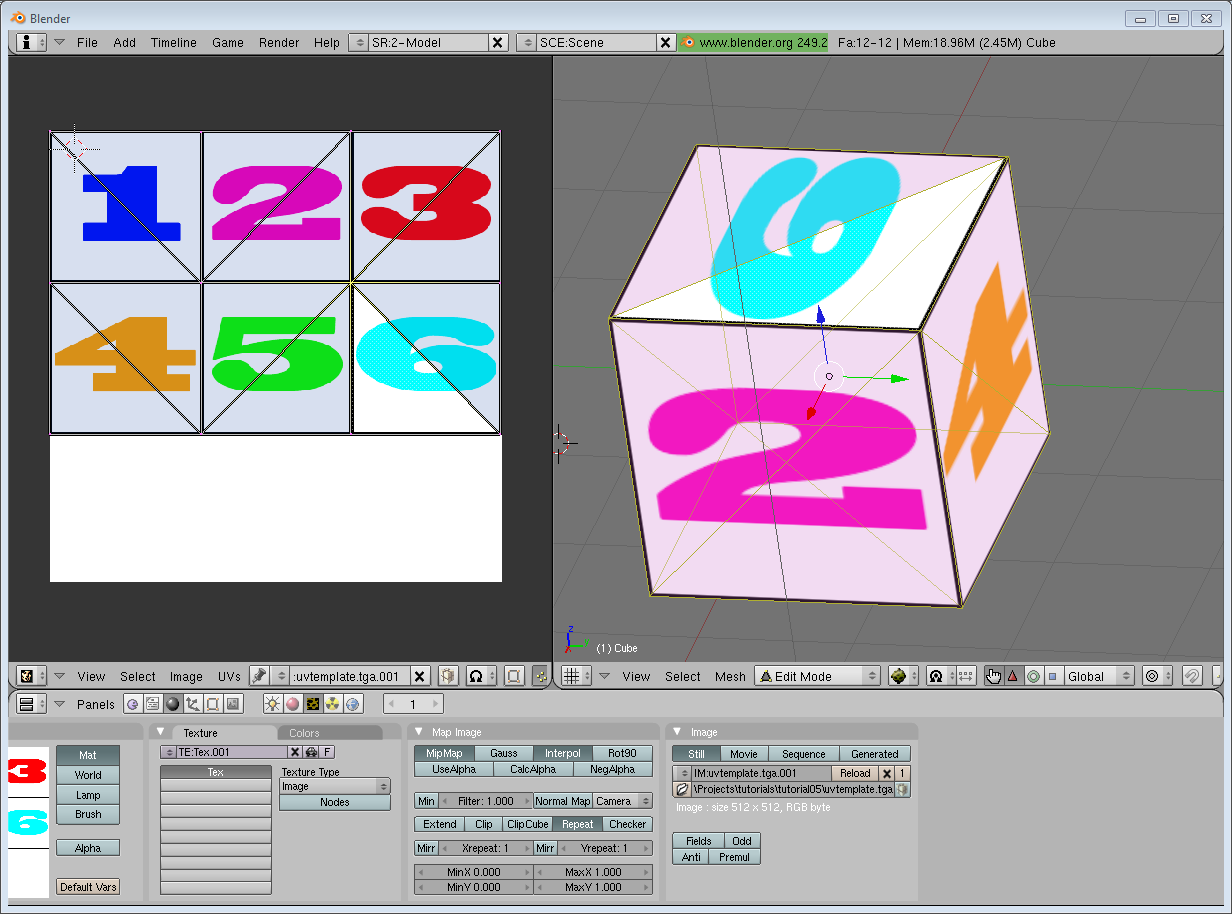

The UV coordinates above correspond to the following model :

The rest is obvious. Generate the buffer, bind it, fill it, configure it, and draw the Vertex Buffer as usual. Just be careful to use 2 as the second parameter (size) of glVertexAttribPointer instead of 3.

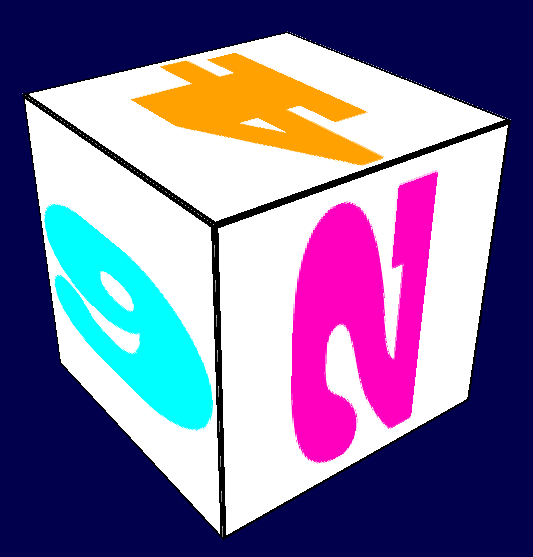

This is the result :

and a zoomed-in version :

What is filtering and mipmapping, and how to use them

As you can see in the screenshot above, the texture quality is not that great. This is because in loadBMP_custom, we wrote :

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

This means that in our fragment shader, texture() takes the texel that is at the (U,V) coordinates, and continues happily.

There are several things we can do to improve this.

Linear filtering

With linear filtering, texture() also looks at the other texels around, and mixes the colours according to the distance to each center. This avoids the hard edges seen above.

This is much better, and this is used a lot, but if you want very high quality you can also use anisotropic filtering, which is a bit slower.
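To make the difference concrete, here is a small CPU sketch of both modes on a grayscale texture. It is only an approximation of what the GPU does: `GrayTexture`, `sampleNearest` and `sampleLinear` are invented names, texel centers are assumed to sit at half-integer positions, and coordinates outside the image are clamped to the edge.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct GrayTexture {
    int width;
    int height;
    std::vector<float> texels;   // row after row, width*height values

    // Fetch one texel, clamping coordinates to the edges of the image.
    float at(int x, int y) const {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return texels[y * width + x];
    }
};

// GL_NEAREST: take the texel that contains (u,v), and continue happily.
float sampleNearest(const GrayTexture& t, float u, float v) {
    int x = static_cast<int>(std::floor(u * t.width));
    int y = static_cast<int>(std::floor(v * t.height));
    return t.at(x, y);
}

// GL_LINEAR: mix the 4 surrounding texels according to the distance
// to each texel center.
float sampleLinear(const GrayTexture& t, float u, float v) {
    float x = u * t.width - 0.5f;
    float y = v * t.height - 0.5f;
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float fx = x - x0;   // horizontal weight of the right-hand texels
    float fy = y - y0;   // vertical weight of the lower texels

    float top    = t.at(x0, y0)     * (1.0f - fx) + t.at(x0 + 1, y0)     * fx;
    float bottom = t.at(x0, y0 + 1) * (1.0f - fx) + t.at(x0 + 1, y0 + 1) * fx;
    return top * (1.0f - fy) + bottom * fy;
}
```

On a 2x1 texture holding a black and a white texel, nearest filtering jumps straight from 0 to 1 in the middle, while linear filtering returns 0.5 there: that is the smooth transition replacing the hard edge.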

Anisotropic filtering

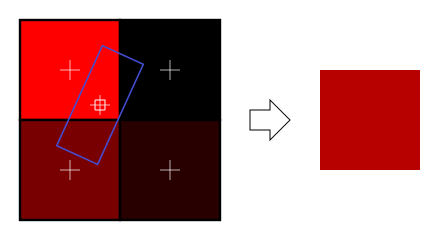

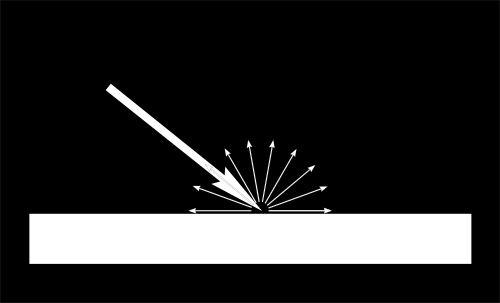

This one approximates the part of the image that is really seen through the fragment. For instance, if the following texture is seen from the side, and a little bit rotated, anisotropic filtering will compute the colour contained in the blue rectangle by taking a fixed number of samples (the “anisotropic level”) along its main direction.

Mipmaps

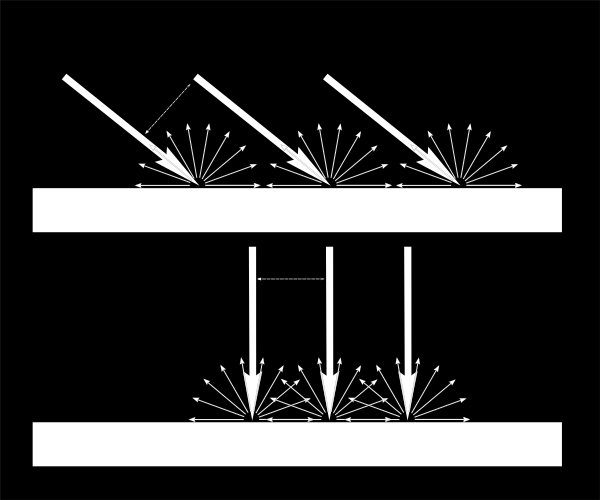

Both linear and anisotropic filtering have a problem. If the texture is seen from far away, mixing only 4 texels won’t be enough. Actually, if your 3D model is so far away that it takes only 1 fragment on screen, ALL the texels of the image should be averaged to produce the final color. This is obviously not done for performance reasons. Instead, we introduce MipMaps :

- At initialisation time, you scale down your image by 2, successively, until you only have a 1x1 image (which effectively is the average of all the texels in the image)

- When you draw a mesh, you select which mipmap is the most appropriate to use given how big the texel should be.

- You sample this mipmap with either nearest, linear or anisotropic filtering

- For additional quality, you can also sample two mipmaps and blend the results.
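The first step of this list can be sketched on the CPU. This is only an illustration, with invented names (`mipLevelCount`, `downsampleHalf`), a grayscale square image and a plain 2x2 average; real drivers are free to use better filters.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Number of images in the chain, from the full-size one down to 1x1.
int mipLevelCount(int width, int height) {
    int levels = 1;
    while (width > 1 || height > 1) {
        width  = std::max(1, width / 2);
        height = std::max(1, height / 2);
        ++levels;
    }
    return levels;
}

// Scale a square grayscale image (side 'size', assumed even) down by 2:
// each destination texel is the average of a 2x2 block of the source.
std::vector<float> downsampleHalf(const std::vector<float>& src, int size) {
    int half = size / 2;
    std::vector<float> dst(static_cast<std::size_t>(half) * half);
    for (int y = 0; y < half; ++y) {
        for (int x = 0; x < half; ++x) {
            float sum = src[(2 * y)     * size + 2 * x]
                      + src[(2 * y)     * size + 2 * x + 1]
                      + src[(2 * y + 1) * size + 2 * x]
                      + src[(2 * y + 1) * size + 2 * x + 1];
            dst[y * half + x] = sum / 4.0f;
        }
    }
    return dst;
}
```

A 256x256 texture yields 9 levels (256, 128, 64, … , 1), and repeatedly calling `downsampleHalf` ends with a single texel holding the average of the whole image.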

Luckily, all this is very simple to do, OpenGL does everything for us provided that you ask it nicely :

// When MAGnifying the image (no bigger mipmap available), use LINEAR filtering

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

// When MINifying the image, use a LINEAR blend of two mipmaps, each filtered LINEARLY too

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

// Generate mipmaps, by the way.

glGenerateMipmap(GL_TEXTURE_2D);

How to load texture with GLFW

Our loadBMP_custom function is great because we made it ourselves, but using a dedicated library is better. GLFW2 can do that too (but only for TGA files, and this feature has been removed in GLFW3, that we now use) :

GLuint loadTGA_glfw(const char * imagepath){

// Create one OpenGL texture

GLuint textureID;

glGenTextures(1, &textureID);

// "Bind" the newly created texture : all future texture functions will modify this texture

glBindTexture(GL_TEXTURE_2D, textureID);

// Read the file, call glTexImage2D with the right parameters

glfwLoadTexture2D(imagepath, 0);

// Nice trilinear filtering.

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

glGenerateMipmap(GL_TEXTURE_2D);

// Return the ID of the texture we just created

return textureID;

}

Compressed Textures

At this point, you’re probably wondering how to load JPEG files instead of TGA.

Short answer : don’t. GPUs can’t understand JPEG. So you’ll compress your original image in JPEG, and decompress it so that the GPU can understand it. You’re back to raw images, but you lost image quality while compressing to JPEG.

There’s a better option.

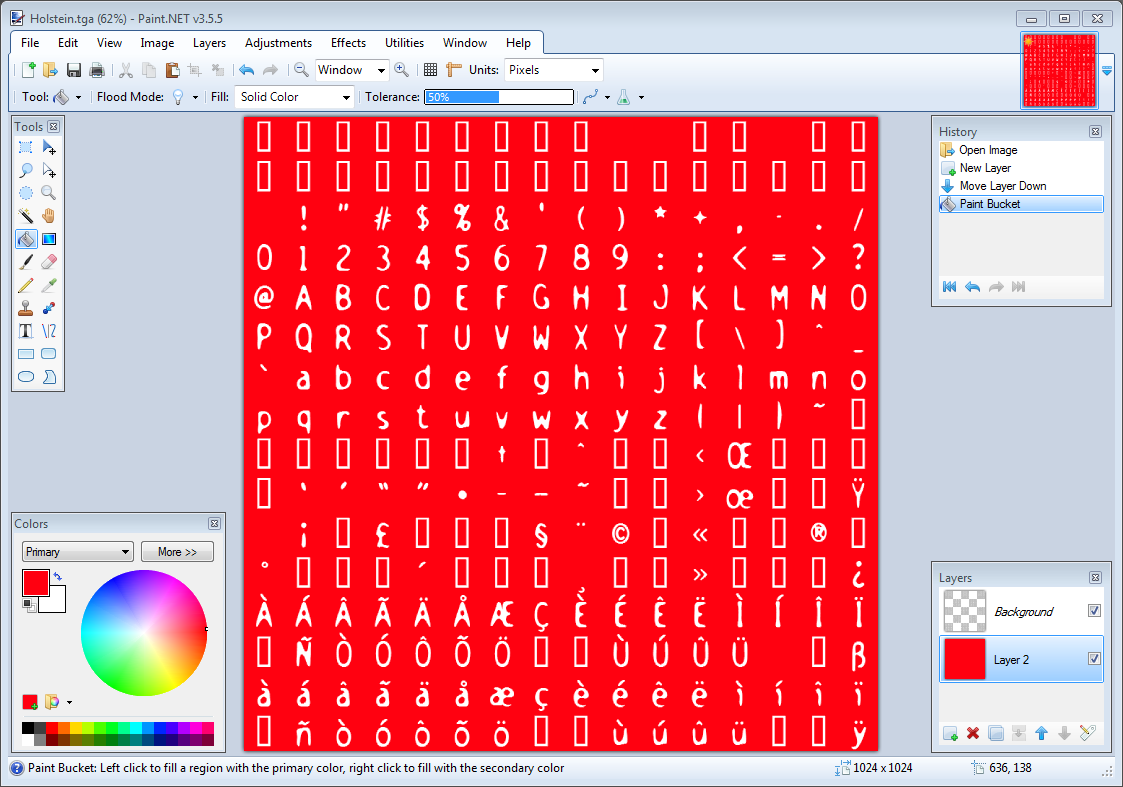

Creating compressed textures

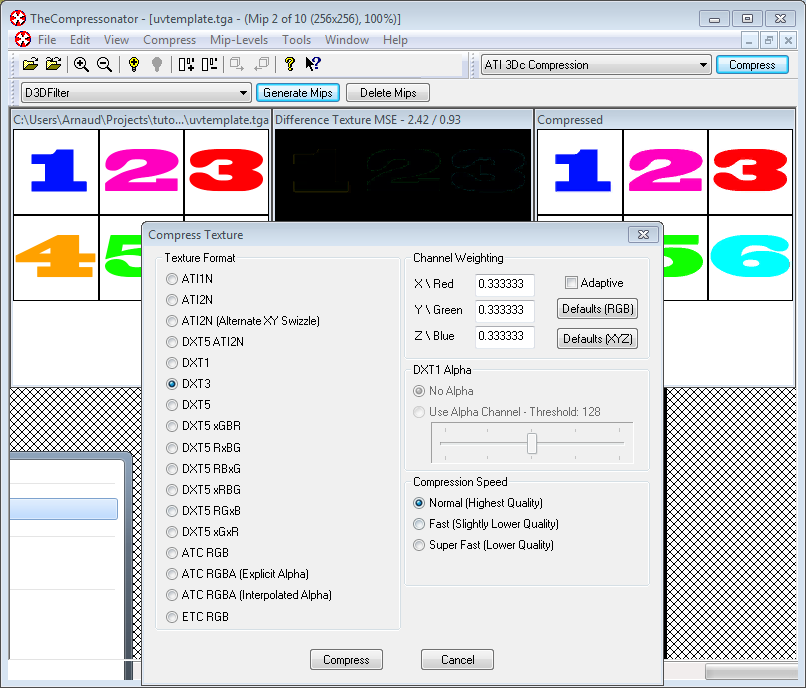

- Download The Compressonator, an AMD tool

- Load a Power-Of-Two texture in it

- Generate mipmaps so that you won’t have to do it at runtime

- Compress it in DXT1, DXT3 or in DXT5 (more about the differences between the various formats on Wikipedia) :

- Export it as a .DDS file.

At this point, your image is compressed in a format that is directly compatible with the GPU. Whenever calling texture() in a shader, it will uncompress it on-the-fly. This can seem slow, but since it takes a LOT less memory, less data needs to be transferred. Memory transfers are expensive, whereas texture decompression is free (there is dedicated hardware for that). Typically, using texture compression yields a 20% increase in performance. So you save on performance and memory, at the expense of reduced quality.

Using the compressed texture

Let’s see how to load the image. It’s very similar to the BMP code, except that the header is organized differently :

GLuint loadDDS(const char * imagepath){

unsigned char header[124];

FILE *fp;

/* try to open the file */

fp = fopen(imagepath, "rb");

if (fp == NULL)

return 0;

/* verify the type of file */

char filecode[4];

fread(filecode, 1, 4, fp);

if (strncmp(filecode, "DDS ", 4) != 0) {

fclose(fp);

return 0;

}

/* get the surface desc */

fread(&header, 124, 1, fp);

unsigned int height = *(unsigned int*)&(header[8 ]);

unsigned int width = *(unsigned int*)&(header[12]);

unsigned int linearSize = *(unsigned int*)&(header[16]);

unsigned int mipMapCount = *(unsigned int*)&(header[24]);

unsigned int fourCC = *(unsigned int*)&(header[80]);

After the header is the actual data : all the mipmap levels, successively. We can read them all in one batch :

unsigned char * buffer;

unsigned int bufsize;

/* how big is it going to be including all mipmaps? */

bufsize = mipMapCount > 1 ? linearSize * 2 : linearSize;

buffer = (unsigned char*)malloc(bufsize * sizeof(unsigned char));

fread(buffer, 1, bufsize, fp);

/* close the file pointer */

fclose(fp);

Here we’ll deal with 3 different formats : DXT1, DXT3 and DXT5. We need to convert the “fourCC” flag into a value that OpenGL understands.

unsigned int components = (fourCC == FOURCC_DXT1) ? 3 : 4;

unsigned int format;

switch(fourCC)

{

case FOURCC_DXT1:

format = GL_COMPRESSED_RGBA_S3TC_DXT1_EXT;

break;

case FOURCC_DXT3:

format = GL_COMPRESSED_RGBA_S3TC_DXT3_EXT;

break;

case FOURCC_DXT5:

format = GL_COMPRESSED_RGBA_S3TC_DXT5_EXT;

break;

default:

free(buffer);

return 0;

}
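The FOURCC_DXT1, FOURCC_DXT3 and FOURCC_DXT5 constants used in this switch are not shown above. A "fourCC" is just four ASCII characters packed into one 32-bit integer, first character in the lowest byte, so they can be rebuilt as below. The `makeFourCC` helper and the `kFourCC…` names are my own; the real source may well define them differently (for instance as plain hexadecimal #defines).

```cpp
#include <cstdint>

// Pack four characters into a 32-bit value, first character in the lowest
// byte. This matches what the unsigned int read of header[80] gives on a
// little-endian machine.
constexpr std::uint32_t makeFourCC(char a, char b, char c, char d) {
    return  static_cast<std::uint32_t>(static_cast<unsigned char>(a))
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(b)) << 8)
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(c)) << 16)
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(d)) << 24);
}

// Hypothetical equivalents of FOURCC_DXT1 / FOURCC_DXT3 / FOURCC_DXT5
constexpr std::uint32_t kFourCCDxt1 = makeFourCC('D', 'X', 'T', '1');
constexpr std::uint32_t kFourCCDxt3 = makeFourCC('D', 'X', 'T', '3');
constexpr std::uint32_t kFourCCDxt5 = makeFourCC('D', 'X', 'T', '5');
```

In hexadecimal, these come out as 0x31545844, 0x33545844 and 0x35545844: read the bytes from right to left and you get "DXT1", "DXT3" and "DXT5".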

Creating the texture is done as usual :

// Create one OpenGL texture

GLuint textureID;

glGenTextures(1, &textureID);

// "Bind" the newly created texture : all future texture functions will modify this texture

glBindTexture(GL_TEXTURE_2D, textureID);

And now, we just have to fill each mipmap one after another :

unsigned int blockSize = (format == GL_COMPRESSED_RGBA_S3TC_DXT1_EXT) ? 8 : 16;

unsigned int offset = 0;

/* load the mipmaps */

for (unsigned int level = 0; level < mipMapCount && (width || height); ++level)

{

unsigned int size = ((width+3)/4)*((height+3)/4)*blockSize;

glCompressedTexImage2D(GL_TEXTURE_2D, level, format, width, height,

0, size, buffer + offset);

offset += size;

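width /= 2;

height /= 2;

// Non-square textures : a dimension that reached 1 must stay at 1, not drop to 0

if (width < 1) width = 1;

if (height < 1) height = 1;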

}

free(buffer);

return textureID;
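The size formula in the loop deserves a word: DXT formats compress blocks of 4x4 texels into 8 bytes (DXT1) or 16 bytes (DXT3, DXT5), so each level takes "number of blocks" times "block size" bytes, rounding the dimensions up to the next multiple of 4. A small sketch, with helper names of my own choosing:

```cpp
#include <algorithm>

// Bytes needed for one mipmap level: one block per 4x4 group of texels,
// partial blocks at the edges count as full blocks.
unsigned int compressedLevelSize(unsigned int width, unsigned int height,
                                 unsigned int blockSize) {
    return ((width + 3) / 4) * ((height + 3) / 4) * blockSize;
}

// Bytes needed for the first 'levels' mipmaps, each half the size of the
// previous one (but never smaller than 1 texel).
unsigned int compressedChainSize(unsigned int width, unsigned int height,
                                 unsigned int levels, unsigned int blockSize) {
    unsigned int total = 0;
    for (unsigned int level = 0; level < levels; ++level) {
        total += compressedLevelSize(width, height, blockSize);
        width  = std::max(1u, width / 2);
        height = std::max(1u, height / 2);
    }
    return total;
}
```

For a 256x256 DXT1 texture, level 0 takes 32768 bytes and the full chain of 9 levels takes 43704 bytes, which fits comfortably in the linearSize * 2 buffer allocated earlier.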

Inverting the UVs

DXT compression comes from the DirectX world, where the V texture coordinate is inverted compared to OpenGL. So if you use compressed textures, you’ll have to use ( coord.u, 1.0-coord.v) to fetch the correct texel. You can do this whenever you want : in your export script, in your loader, in your shader…

Conclusion

You just learnt to create, load and use textures in OpenGL.

In general, you should only use compressed textures, since they are smaller to store, almost instantaneous to load, and faster to use; the main drawback is that you have to convert your images through The Compressonator (or any similar tool).

Exercises

- The DDS loader is implemented in the source code, but not the texture coordinate modification. Change the code at the appropriate place to display the cube correctly.

- Experiment with the various DDS formats. Do they give different results ? Different compression ratios ?

- Try not to generate mipmaps in The Compressonator. What is the result ? Give 3 different ways to fix this.

References

- Using texture compression in OpenGL , Sébastien Domine, NVIDIA

Tutorial 6 : Keyboard and Mouse

Welcome to our 6th tutorial !

We will now learn how to use the mouse and the keyboard to move the camera just like in an FPS.

The interface

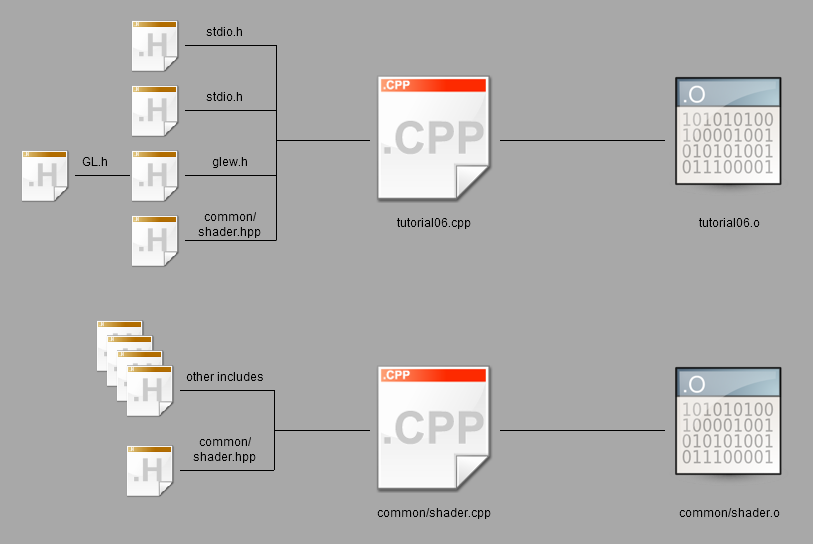

Since this code will be re-used throughout the tutorials, we will put the code in a separate file : common/controls.cpp, and declare the functions in common/controls.hpp so that tutorial06.cpp knows about them.

The code of tutorial06.cpp doesn’t change much from the previous tutorial. The major modification is that instead of computing the MVP matrix once, we now have to do it every frame. So let’s move this code inside the main loop :

do{

// ...

// Compute the MVP matrix from keyboard and mouse input

computeMatricesFromInputs();

glm::mat4 ProjectionMatrix = getProjectionMatrix();

glm::mat4 ViewMatrix = getViewMatrix();

glm::mat4 ModelMatrix = glm::mat4(1.0);

glm::mat4 MVP = ProjectionMatrix * ViewMatrix * ModelMatrix;

// ...

}

This code needs 3 new functions :

- computeMatricesFromInputs() reads the keyboard and mouse and computes the Projection and View matrices. This is where all the magic happens.

- getProjectionMatrix() just returns the computed Projection matrix.

- getViewMatrix() just returns the computed View matrix.

This is just one way to do it, of course. If you don’t like these functions, go ahead and change them.

Let’s see what’s inside controls.cpp.

The actual code

We’ll need a few variables.

// position

glm::vec3 position = glm::vec3( 0, 0, 5 );

// horizontal angle : toward -Z

float horizontalAngle = 3.14f;

// vertical angle : 0, look at the horizon

float verticalAngle = 0.0f;

// Initial Field of View

float initialFoV = 45.0f;

float speed = 3.0f; // 3 units / second

float mouseSpeed = 0.005f;

FoV is the level of zoom. 80° = very wide angle, huge deformations. 60° - 45° : standard. 20° : big zoom.

We will first recompute position, horizontalAngle, verticalAngle and FoV according to the inputs, and then compute the View and Projection matrices from position, horizontalAngle, verticalAngle and FoV.

Orientation

Reading the mouse position is easy :

// Get mouse position

int xpos, ypos;

glfwGetMousePos(&xpos, &ypos);

but we have to take care to put the cursor back to the center of the screen, or it will soon go outside the window and you won’t be able to move anymore.

// Reset mouse position for next frame

glfwSetMousePos(1024/2, 768/2);

Notice that this code assumes that the window is 1024*768, which of course is not necessarily the case. You can use glfwGetWindowSize if you want, too.

We can now compute our viewing angles :

// Compute new orientation

horizontalAngle += mouseSpeed * deltaTime * float(1024/2 - xpos );

verticalAngle += mouseSpeed * deltaTime * float( 768/2 - ypos );

Let’s read this from right to left :

- 1024/2 - xpos means : how far is the mouse from the center of the window ? The bigger this value, the more we want to turn.

- float(…) converts it to a floating-point number so that the multiplication goes well.

- mouseSpeed is just there to speed up or slow down the rotations. Fine-tune this at will, or let the user choose it.

- += : If you didn’t move the mouse, 1024/2-xpos will be 0, and horizontalAngle+=0 doesn’t change horizontalAngle. If you had a “=” instead, you would be forced back to your original orientation each frame, which isn’t good.

We can now compute a vector that represents, in World Space, the direction in which we’re looking :

// Direction : Spherical coordinates to Cartesian coordinates conversion

glm::vec3 direction(

cos(verticalAngle) * sin(horizontalAngle),

sin(verticalAngle),

cos(verticalAngle) * cos(horizontalAngle)

);

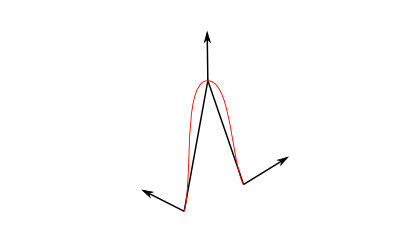

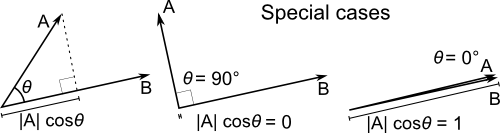

This is a standard computation, but if you don’t know about cosine and sine, here’s a short explanation :

The formula above is just the generalisation to 3D.

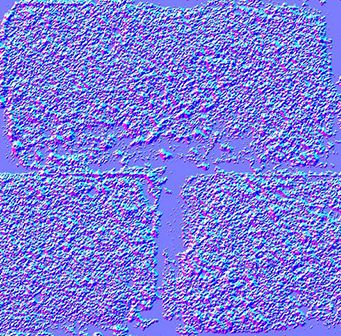
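If you want to convince yourself that the conversion behaves as expected, you can try it outside of OpenGL. This is a minimal sketch with a hypothetical Vec3 struct standing in for glm::vec3; the function name directionFromAngles is also an assumption, the formula is the one used above.

```cpp
#include <cmath>

// Hypothetical stand-in for glm::vec3, to keep the sketch self-contained.
struct Vec3 { float x, y, z; };

// Spherical coordinates (two angles, in radians) to a unit-length
// Cartesian direction, with the same convention as in controls.cpp.
Vec3 directionFromAngles(float horizontalAngle, float verticalAngle)
{
    return Vec3{
        std::cos(verticalAngle) * std::sin(horizontalAngle),
        std::sin(verticalAngle),
        std::cos(verticalAngle) * std::cos(horizontalAngle)
    };
}
```

With horizontalAngle close to Pi and verticalAngle at 0, the result is very close to (0, 0, -1) : we look toward -Z, at the horizon. Whatever the angles, the vector keeps a length of 1.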

Now we want to compute the “up” vector reliably. Notice that “up” isn’t always towards +Y : if you look down, for instance, the “up” vector will be in fact horizontal. Here is an example of two cameras with the same position, the same target, but a different up:

In our case, the only constant is that the vector that goes to the right of the camera is always horizontal. You can check this by putting your arm horizontal, and looking up, down, in any direction. So let’s define the “right” vector : its Y coordinate is 0 since it’s horizontal, and its X and Z coordinates are just like in the figure above, but with the angles rotated by 90°, or Pi/2 radians.

// Right vector

glm::vec3 right = glm::vec3(

sin(horizontalAngle - 3.14f/2.0f),

0,

cos(horizontalAngle - 3.14f/2.0f)

);

We have a “right” vector and a “direction”, or “front” vector. The “up” vector is a vector that is perpendicular to these two. A useful mathematical tool makes this very easy : the cross product.

// Up vector : perpendicular to both direction and right

glm::vec3 up = glm::cross( right, direction );

To remember what the cross product does, it’s very simple. Just recall the Right Hand Rule from Tutorial 3. The first vector is the thumb; the second is the index; and the result is the middle finger. It’s very handy.
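Here is a small sanity check of the “right” and “up” computations, as a sketch that does not depend on GLM. Vec3, cross and rightFromAngle are hypothetical helpers written for this example; the cross function spells out what glm::cross computes.

```cpp
#include <cmath>

// Hypothetical stand-in for glm::vec3, to keep the sketch self-contained.
struct Vec3 { float x, y, z; };

// Cross product : a vector perpendicular to both a and b.
Vec3 cross(const Vec3& a, const Vec3& b)
{
    return Vec3{
        a.y * b.z - a.z * b.y,
        a.z * b.x - a.x * b.z,
        a.x * b.y - a.y * b.x
    };
}

// Horizontal vector pointing to the right of the camera.
Vec3 rightFromAngle(float horizontalAngle)
{
    const float halfPi = 1.57079633f;
    return Vec3{
        std::sin(horizontalAngle - halfPi),
        0.0f,
        std::cos(horizontalAngle - halfPi)
    };
}
```

When looking toward -Z (horizontalAngle close to Pi, direction = (0, 0, -1)), right comes out as roughly (1, 0, 0), and cross(right, direction) as roughly (0, 1, 0), which is the “up” you would expect. Swapping the two arguments of cross gives the opposite vector, so the order matters.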

Position

The code is pretty straightforward. By the way, I used the up/down/right/left keys instead of WASD because on my AZERTY keyboard, WASD is actually ZQSD. It’s also different on QWERTZ keyboards, let alone Korean keyboards. I don’t even know what layout Korean people have, but I guess it’s also different.

// Move forward

if (glfwGetKey( GLFW_KEY_UP ) == GLFW_PRESS){

position += direction * deltaTime * speed;

}

// Move backward

if (glfwGetKey( GLFW_KEY_DOWN ) == GLFW_PRESS){

position -= direction * deltaTime * speed;

}

// Strafe right

if (glfwGetKey( GLFW_KEY_RIGHT ) == GLFW_PRESS){

position += right * deltaTime * speed;

}

// Strafe left

if (glfwGetKey( GLFW_KEY_LEFT ) == GLFW_PRESS){

position -= right * deltaTime * speed;

}

The only special thing here is the deltaTime. You don’t want to move by “speed” units each frame, for a simple reason :

- If you have a fast computer, and you run at 60 fps, you’d move 60*speed units in 1 second

- If you have a slow computer, and you run at 20 fps, you’d move 20*speed units in 1 second

Since having a better computer is not an excuse for going faster, you have to scale the distance by the “time since the last frame”, or “deltaTime”.

- If you have a fast computer, and you run at 60 fps, you’d move 1/60 * speed units in 1 frame, so 1*speed units in 1 second.

- If you have a slow computer, and you run at 20 fps, you’d move 1/20 * speed units in 1 frame, so 1*speed units in 1 second.

which is much better. deltaTime is very simple to compute :

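// lastTime is initialised only once, the first time this code runs

static double lastTime = glfwGetTime();

double currentTime = glfwGetTime();

float deltaTime = float(currentTime - lastTime);

lastTime = currentTime; // remember it for the next frame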
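The effect of deltaTime can be checked without opening a window. The following sketch simulates one second of movement at a given frame rate; distanceAfterOneSecond is a hypothetical helper written for this example, and it assumes every frame takes exactly the same time.

```cpp
// Distance covered during one simulated second at the given frame rate,
// when the position advances by speed * deltaTime on every frame.
float distanceAfterOneSecond(int framesPerSecond, float speed)
{
    const float deltaTime = 1.0f / static_cast<float>(framesPerSecond);
    float position = 0.0f;
    for (int frame = 0; frame < framesPerSecond; ++frame) {
        position += speed * deltaTime;
    }
    return position;
}
```

With speed = 3, both distanceAfterOneSecond(60, 3.0f) and distanceAfterOneSecond(20, 3.0f) give about 3 units, up to floating-point rounding : the frame rate no longer changes how fast you move.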

Field Of View

For fun, we can also bind the wheel of the mouse to the Field Of View, so that we can have a cheap zoom :

float FoV = initialFoV - 5 * glfwGetMouseWheel();
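Nothing in the line above stops the user from scrolling until the FoV becomes negative or absurdly wide. One possible safeguard is to clamp the value, as in this sketch. The function name fovFromWheel and the 20° to 80° range are assumptions made for this example (the range is taken from the rule of thumb given earlier), not something the tutorial code does.

```cpp
#include <algorithm>

// Field of view in degrees for a given mouse wheel position,
// kept inside a range that still gives a usable projection.
float fovFromWheel(float initialFoV, int wheelPosition)
{
    const float minFoV = 20.0f; // big zoom
    const float maxFoV = 80.0f; // very wide angle
    const float fov = initialFoV - 5.0f * static_cast<float>(wheelPosition);
    return std::min(maxFoV, std::max(minFoV, fov));
}
```

In controls.cpp, this would be called with initialFoV and the value returned by glfwGetMouseWheel().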

Computing the matrices

Computing the matrices is now straightforward. We use the exact same functions as before, but with our new parameters.

// Projection matrix : Field of View from the mouse wheel (45° initially), 4:3 ratio, display range : 0.1 unit <-> 100 units

ProjectionMatrix = glm::perspective(glm::radians(FoV), 4.0f / 3.0f, 0.1f, 100.0f);

// Camera matrix

ViewMatrix = glm::lookAt(

position, // Camera is here

position+direction, // and looks here : at the same position, plus "direction"

up // Head is up (set to 0,-1,0 to look upside-down)

);

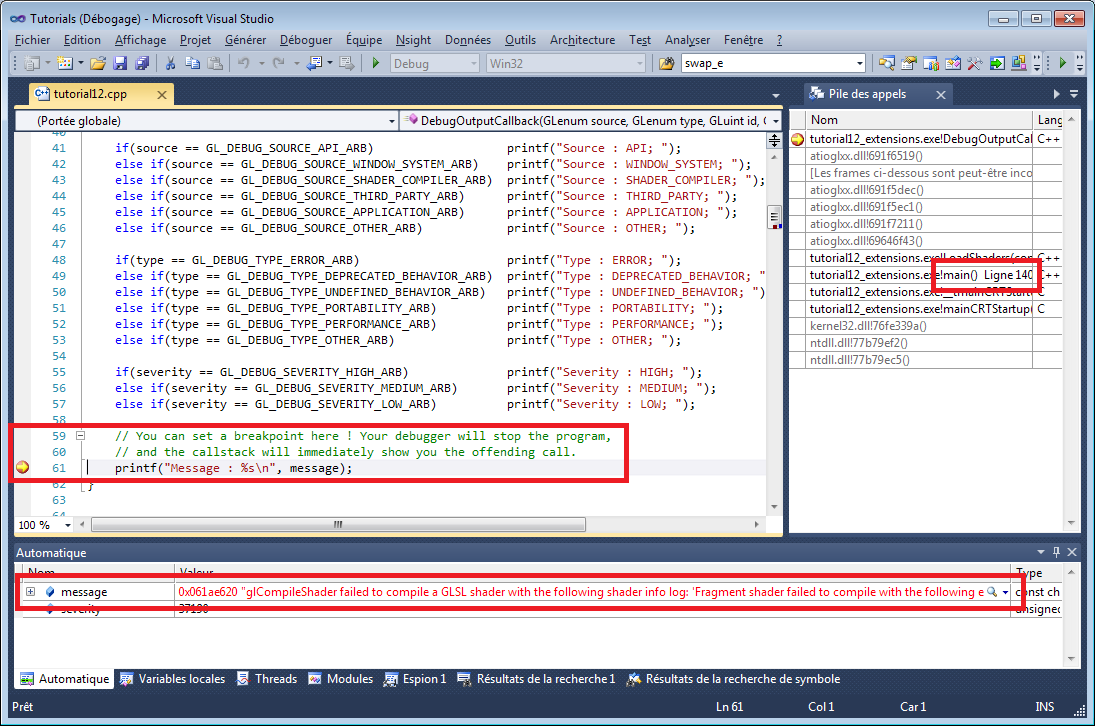

Results

Backface Culling

Now that you can freely move around, you’ll notice that if you go inside the cube, polygons are still displayed. This can seem obvious, but this remark actually opens an opportunity for optimisation. As a matter of fact, in a usual application, you are never inside a cube.

The idea is to let the GPU check if the camera is behind, or in front of, the triangle. If it’s in front, display the triangle; if it’s behind, and the mesh is closed, and we’re not inside the mesh, then there will be another triangle in front of it, and nobody will notice anything, except that everything will be faster : half as many triangles on average !

The best thing is that it’s very easy to check this. The GPU computes the normal of the triangle (using the cross product, remember ?) and checks whether this normal is oriented towards the camera or not.

This comes at a cost, unfortunately : the orientation of the triangle is implicit. This means that if you invert two vertices in your buffer, you’ll probably end up with a hole. But it’s generally worth the little additional work. Often, you just have to click “invert normals” in your 3D modeler (which will, in fact, invert vertices, and thus normals) and everything is just fine.

Enabling backface culling is a breeze :

// Cull triangles whose normal is not towards the camera

glEnable(GL_CULL_FACE);
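To make the idea concrete, here is the test written on the CPU. This is only a conceptual sketch : the GPU actually decides from the winding order of the projected triangle, and all the names below (Vec3, subtract, cross, dot, isFrontFacing) are hypothetical helpers. It assumes that front faces list their vertices counter-clockwise, which is OpenGL’s default.

```cpp
// Hypothetical stand-in for glm::vec3, to keep the sketch self-contained.
struct Vec3 { float x, y, z; };

Vec3 subtract(const Vec3& a, const Vec3& b)
{
    return Vec3{ a.x - b.x, a.y - b.y, a.z - b.z };
}

Vec3 cross(const Vec3& a, const Vec3& b)
{
    return Vec3{ a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
}

float dot(const Vec3& a, const Vec3& b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// True if the triangle (a, b, c), whose vertices are counter-clockwise when
// seen from the front, shows its front side to the camera.
bool isFrontFacing(const Vec3& a, const Vec3& b, const Vec3& c, const Vec3& cameraPosition)
{
    const Vec3 normal = cross(subtract(b, a), subtract(c, a));
    return dot(normal, subtract(cameraPosition, a)) > 0.0f;
}
```

Swapping two vertices of the triangle flips the normal, and therefore flips the result : this is exactly why inverting two vertices in your buffer creates a hole once culling is enabled.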

Exercises

- Restrict verticalAngle so that you can’t go upside-down

- Create a camera that rotates around the object ( position = ObjectCenter + ( radius * cos(time), height, radius * sin(time) ) ); bind the radius/height/time to the keyboard/mouse, or whatever

- Have fun !

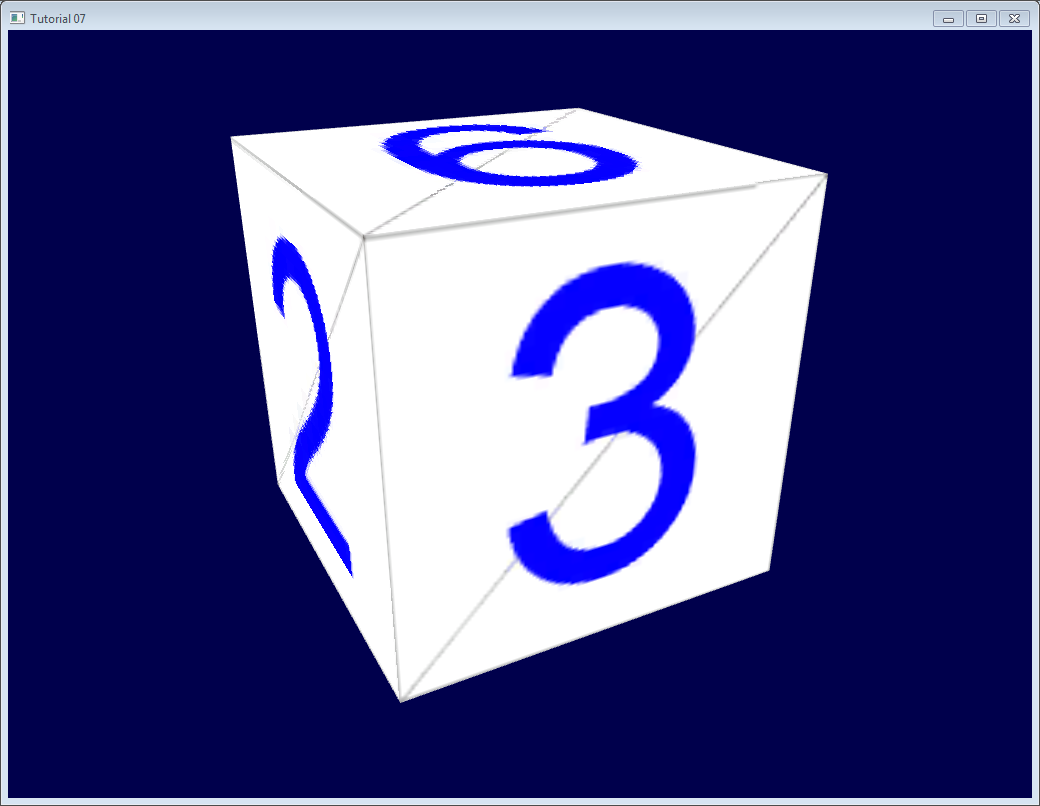

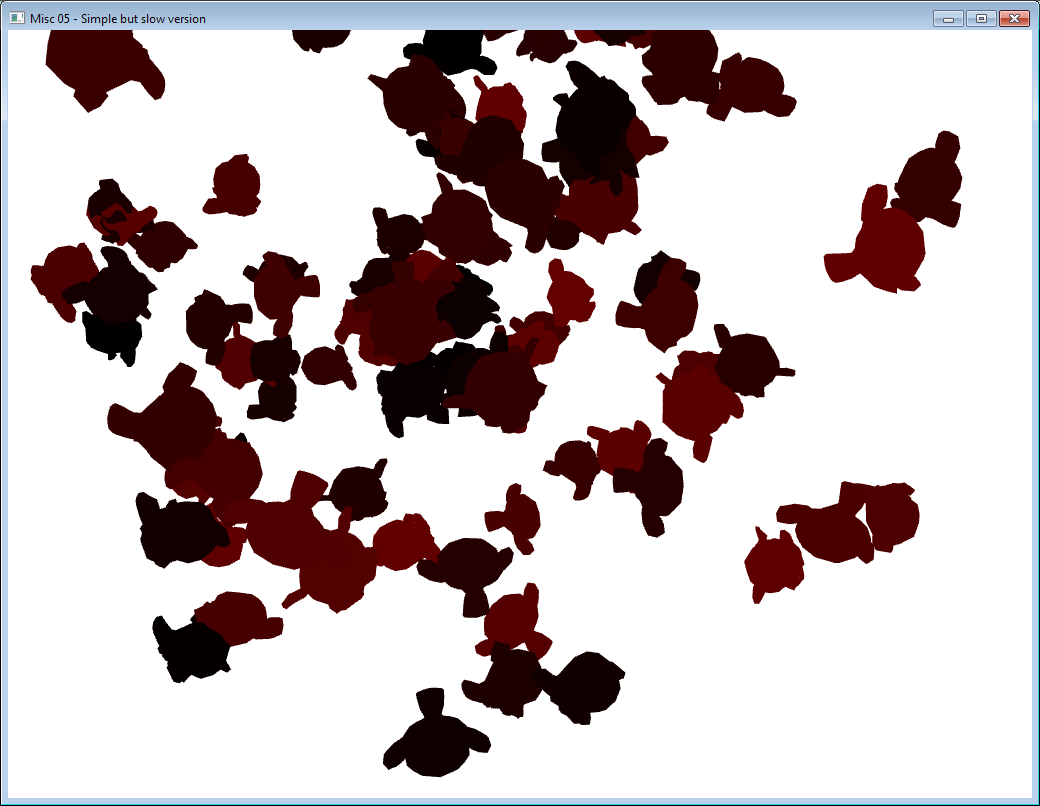

Tutorial 7 : Model loading

Until now, we hardcoded our cube directly in the source code. I’m sure you will agree that this was cumbersome and not very handy.

In this tutorial we will learn how to load 3D meshes from files. We will do this just like we did for the textures : we will write a tiny, very limited loader, and I’ll give you some pointers to actual libraries that can do this better than us.

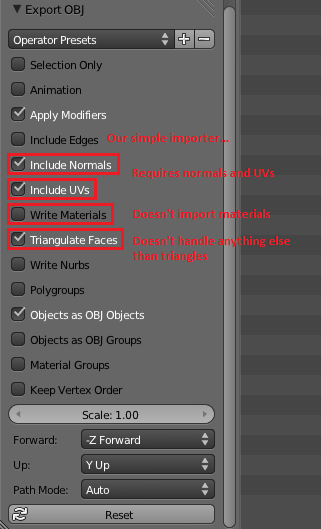

To keep this tutorial as simple as possible, we’ll use the OBJ file format, which is both very simple and very common. And once again, to keep things simple, we will only deal with OBJ files with 1 UV coordinate and 1 normal per vertex (you don’t have to know what a normal is right now).

Loading the OBJ

Our function, located in common/objloader.cpp and declared in common/objloader.hpp, will have the following signature :

bool loadOBJ(

const char * path,

std::vector < glm::vec3 > & out_vertices,

std::vector < glm::vec2 > & out_uvs,

std::vector < glm::vec3 > & out_normals

)

We want loadOBJ to read the file “path”, write the data in out_vertices/out_uvs/out_normals, and return false if something went wrong. std::vector is the C++ way to declare an array (here, of glm::vec3 or glm::vec2) whose size can be modified at will : it has nothing to do with a mathematical vector. Just an array, really. And finally, the & means that the function will be able to modify the std::vectors.
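If the “output parameter” pattern is new to you, here is a tiny illustration of it. This is not the tutorial’s loader : readVertexLines is a hypothetical function that only understands “v x y z” lines from a string, and Vec3 is a stand-in for glm::vec3. It follows the same conventions as the signature above : the std::vector is passed with &, and the return value is false if something went wrong.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for glm::vec3, to keep the sketch self-contained.
struct Vec3 { float x, y, z; };

// Appends every "v x y z" line found in text to out_vertices.
// Returns false as soon as a "v" line cannot be parsed.
bool readVertexLines(const std::string& text, std::vector<Vec3>& out_vertices)
{
    std::istringstream lines(text);
    std::string line;
    while (std::getline(lines, line)) {
        std::istringstream fields(line);
        std::string keyword;
        fields >> keyword;
        if (keyword != "v") {
            continue; // comments, vt, mtllib, empty lines... are ignored here
        }
        Vec3 vertex;
        if (!(fields >> vertex.x >> vertex.y >> vertex.z)) {
            return false;
        }
        out_vertices.push_back(vertex);
    }
    return true;
}
```

The caller declares an empty std::vector, passes it to the function, and finds it filled afterwards, because the & lets the function work on the caller’s vector instead of a copy.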

Example OBJ file

An OBJ file looks more or less like this :

# Blender3D v249 OBJ File: untitled.blend

# www.blender3d.org

mtllib cube.mtl

v 1.000000 -1.000000 -1.000000

v 1.000000 -1.000000 1.000000

v -1.000000 -1.000000 1.000000

v -1.000000 -1.000000 -1.000000

v 1.000000 1.000000 -1.000000

v 0.999999 1.000000 1.000001

v -1.000000 1.000000 1.000000

v -1.000000 1.000000 -1.000000

vt 0.748573 0.750412

vt 0.749279 0.501284

vt 0.999110 0.501077

vt 0.999455 0.750380

vt 0.250471 0.500702

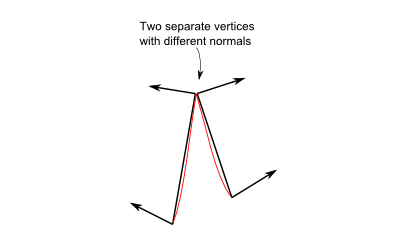

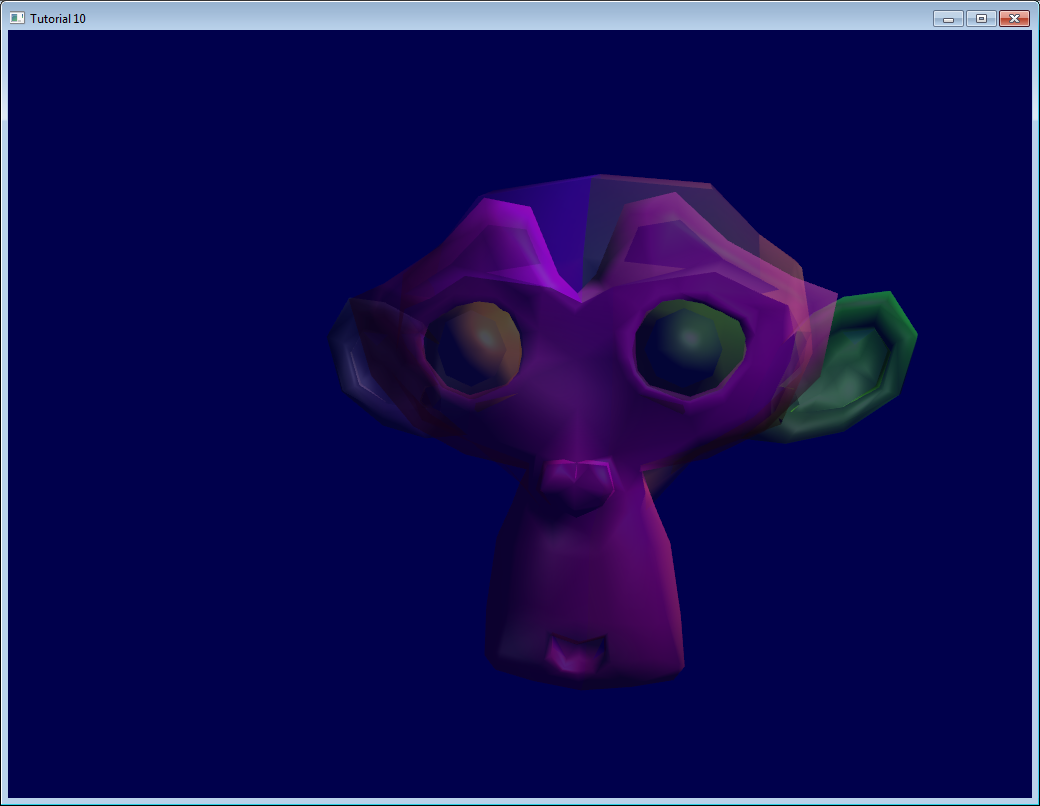

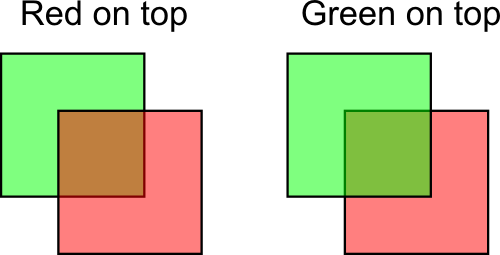

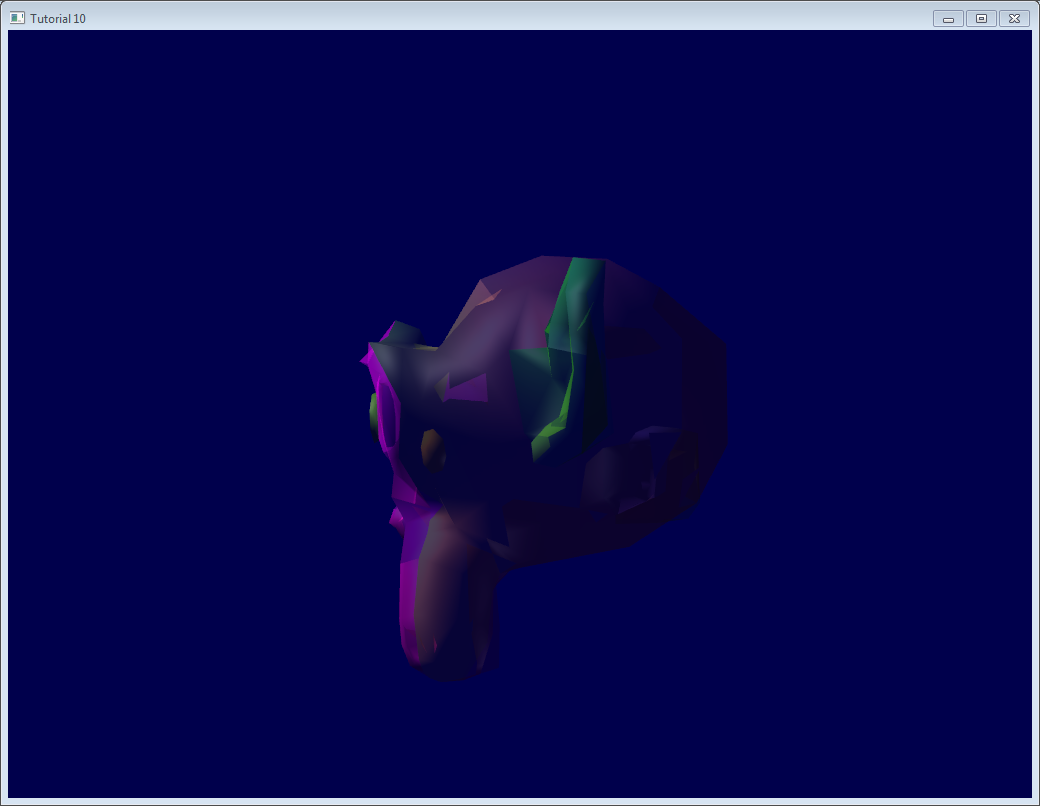

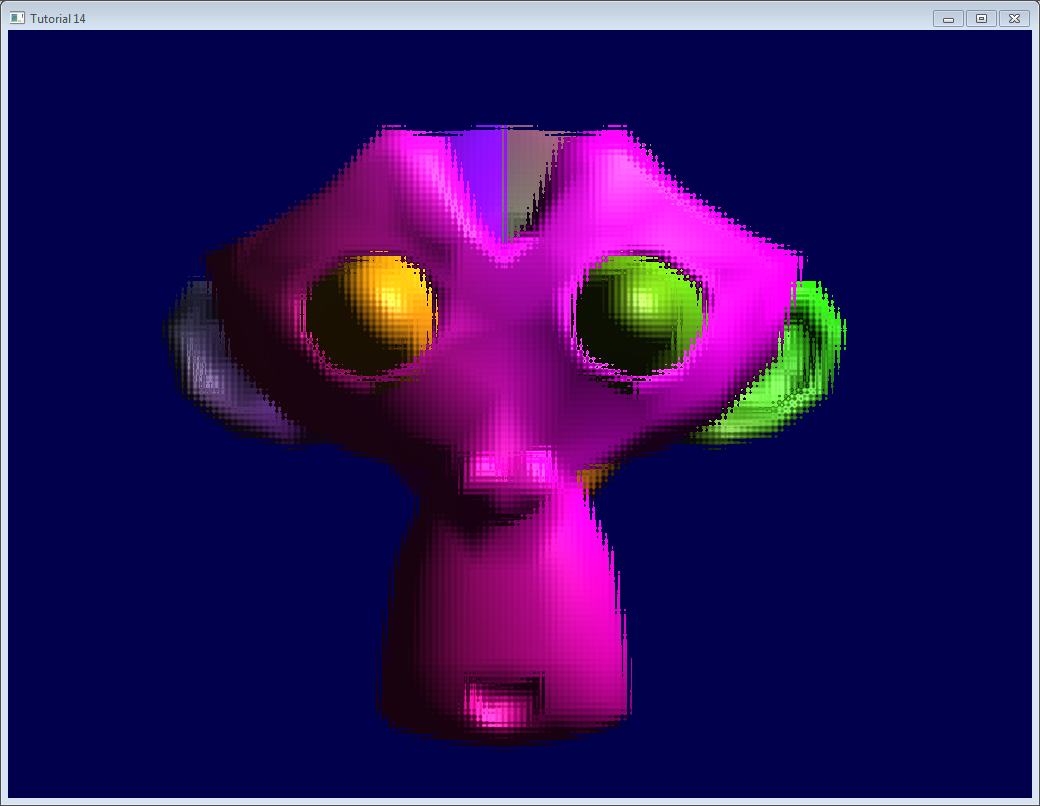

vt 0.249682 0.749677